
objects

causes

colours

words

non-verbal communications

minds

actions

\section{Knowledge of Mind}

challenge

Explain the emergence in development

of mindreading.

The challenge is to explain the developmental emergence of mindreading.

Let me explain.

\textit{Mindreading} is

the process of

identifying mental states and purposive actions

as the mental states and purposive actions of a particular subject.

Researchers sometimes use the term ‘theory of mind’.

‘In saying that an individual has a theory of mind, we mean that the individual imputes mental states to himself and to others’

\citep[p.\ 515]{premack_does_1978}

(Premack & Woodruff 1978: 515)

So, to be clear about the terminology, to have a theory of mind is just to be able

to mindread,

that is, to identify mental states and purposive actions

as the mental states and purposive actions of a particular subject.

So the challenge is to explain the emergence of mindreading.

You know (let's say) that Ayesha believes Beatrice is in the library.

Humans are not born knowing individuating facts about others' beliefs.

How do they come to be in a position to know such facts?

Meeting this challenge initially seems simple.

But, as you'll see, we quickly end up with a puzzle.

I think this puzzle requires us to rethink what is involved in having a conception of

the mental.

I shall focus on awareness of others' beliefs to the exclusion of other mental states.

There's no theoretical reason for this; it's just a practical thing.

And what we learn about belief will generalise to other mental states.

belief

How can we test whether someone is able to ascribe beliefs to others?

Here is one quite famous way to test this; perhaps some of you are already aware of it.

Let's suppose I am the experimenter and you are the subjects.

First I tell you a story ...

‘Maxi puts his chocolate in the BLUE box and leaves the room to play. While he is away (and cannot see), his mother moves the chocolate from the BLUE box to the GREEN box. Later Maxi returns. He wants his chocolate.’

In a standard \textit{false belief task}, `[t]he subject is aware that he/she and

another person [Maxi] witness a certain state of affairs x. Then, in the absence of

the other person the subject witnesses an unexpected change in the state of affairs

from x to y' \citep[p.\ 106]{Wimmer:1983dz}. The task is designed to measure the

subject's sensitivity to the probability that Maxi will falsely believe x to obtain.

I wonder where Maxi will look for his chocolate

‘Where will Maxi look for his chocolate?’

Wimmer & Perner 1983

Two models of minds and actions

Maxi wants his chocolate.

Maxi wants his chocolate.

Maxi believes his chocolate is in the blue box.

Maxi’s chocolate is in the green box.

Maxi will look in the blue box.

Maxi will look in the green box.
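The two models can be read as two prediction rules. The sketch below is only an illustration. The names `Scenario`, `fact_model` and `belief_model` are hypothetical, and it assumes that the only relevant mental states are Maxi's desire for his chocolate and, for the belief model, his belief about where it is.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """Hypothetical encoding of the Maxi story (assumes Maxi wants his chocolate)."""
    chocolate_location: str  # where the chocolate actually is
    believed_location: str   # where Maxi believes it is


def fact_model(s: Scenario) -> str:
    """Predict search from the agent's desire plus the facts; beliefs play no role."""
    return s.chocolate_location


def belief_model(s: Scenario) -> str:
    """Predict search from the agent's desire plus what the agent believes."""
    return s.believed_location


maxi = Scenario(chocolate_location="green box", believed_location="blue box")
print("fact model:", fact_model(maxi))      # fact model: green box
print("belief model:", belief_model(maxi))  # belief model: blue box
```

When Maxi's belief is true (both fields equal), the two models make the same prediction. Only a false-belief scenario like this one can tell them apart.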


Here's the really surprising thing.

Children do really badly on this until they are around four years of age.

And they seem to develop the ability to pass this task only gradually, over months or years.

(There's something else that isn't surprising to most people but should be: adult humans not only nearly always provide the answer we're calling 'correct': they also believe that there is an obviously correct answer and that it would be a mistake to give any other answer. I'll return to this point later.)

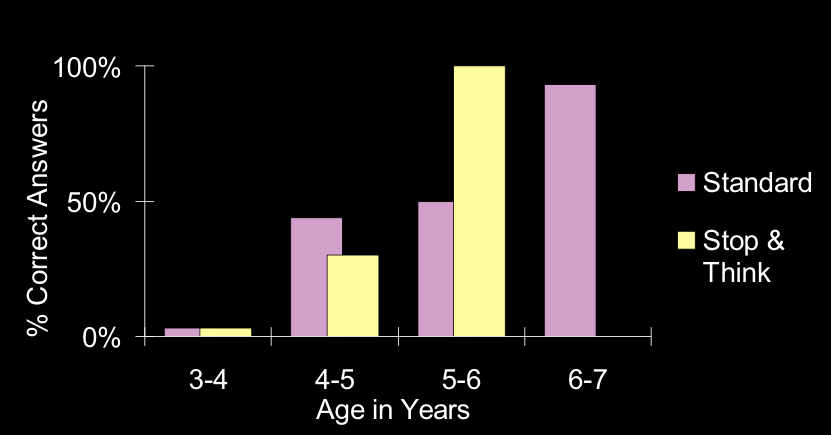

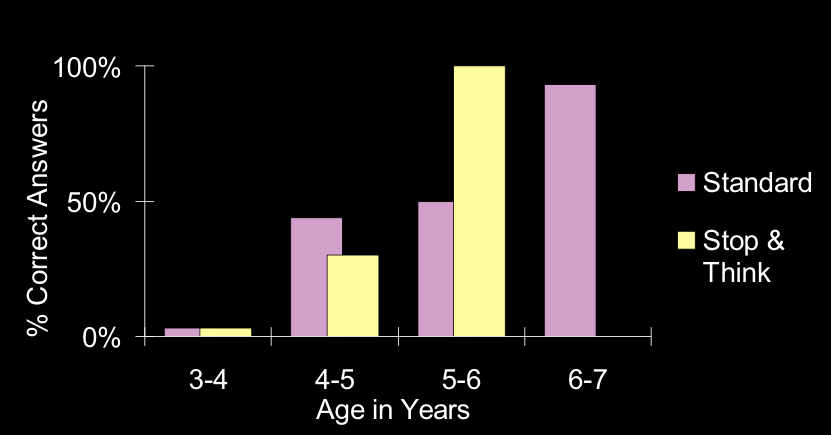

Wimmer & Perner, 1983

(NB: The figure is not Wimmer & Perner's but drawn from their data.)

So in terms of my two models,

it looks like 3-year-olds are relying on a fact model

and only the older children are relying on a belief model.

Two models of minds and actions

Maxi wants his chocolate.

Maxi wants his chocolate.

Maxi believes his chocolate is in the blue box.

Maxi’s chocolate is in the green box.

Maxi will look in the blue box.

Maxi will look in the green box.

There's been some stuff in the press recently about bad science, mainly some dodgy methods and failures to replicate.

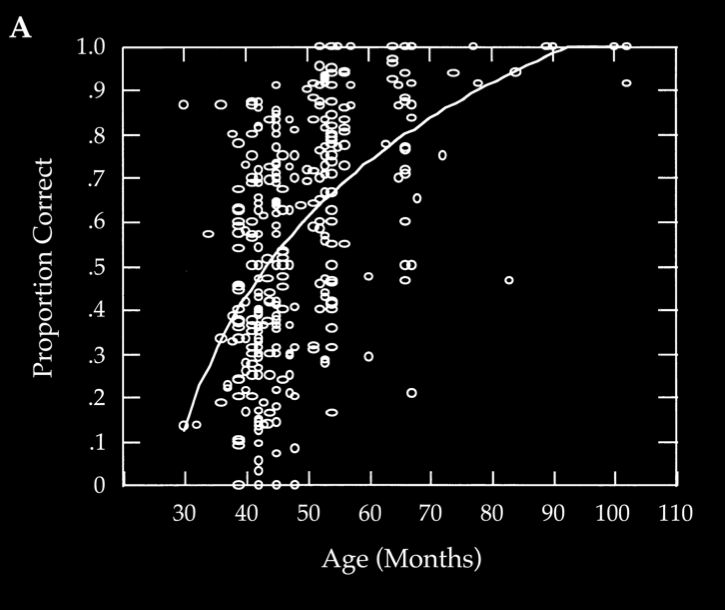

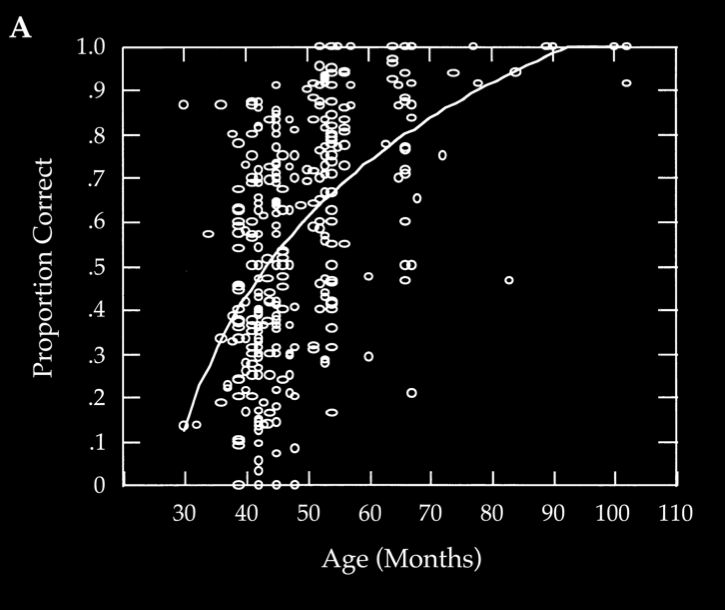

So you'll be pleased to know that a meta-study of 178 papers confirmed Wimmer & Perner's findings.

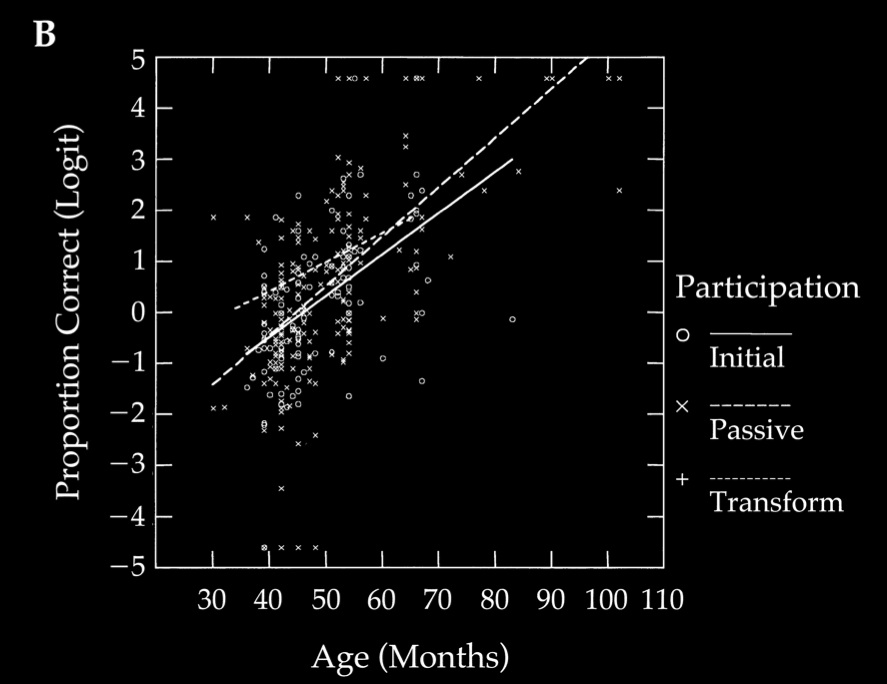

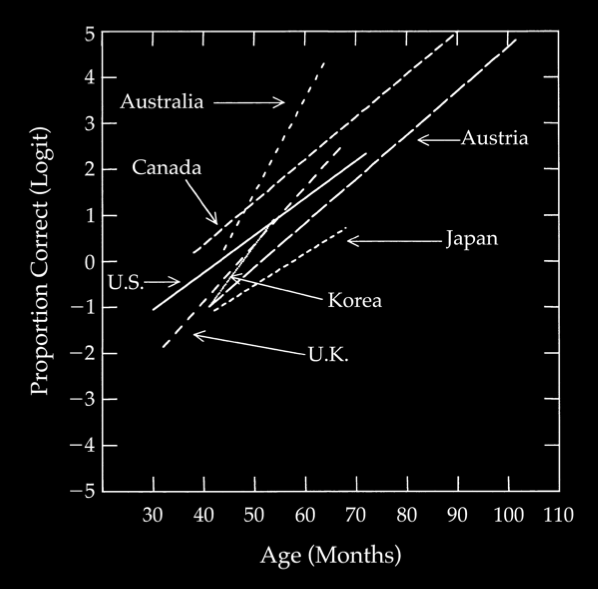

Wellman et al, 2001 figure 2A

Now there is clearly some variation here.

That's because different researchers implemented different versions of the original task.

We can use the meta-analysis of these experiments as a shortcut to finding out what sorts of factors affect children's performance.

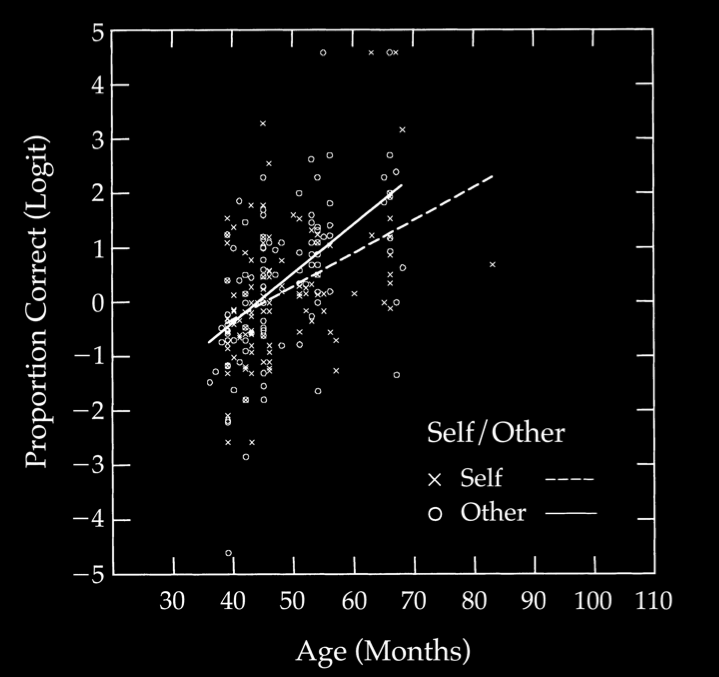

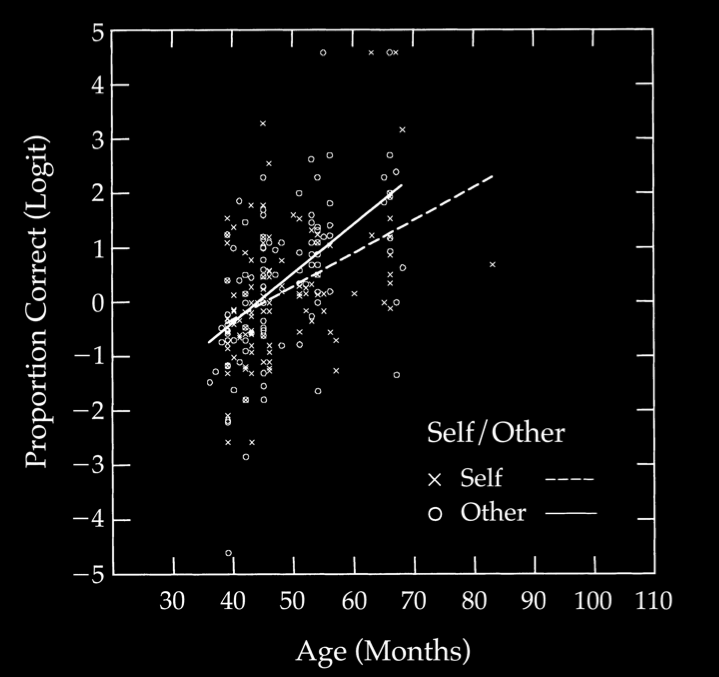

One factor that seems to make hardly any difference is whether you ask children about others' beliefs or their own beliefs.

To repeat, you get essentially the same results whether you ask children about others' beliefs or their own beliefs.

Children literally do not know their own minds.

Wellman et al, 2001 figure 5

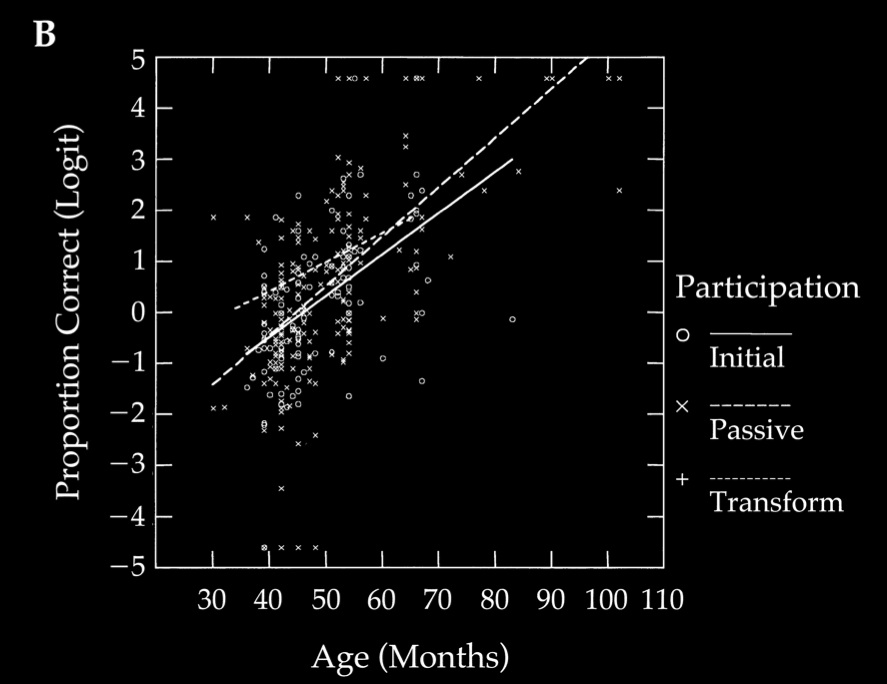

What happens if we involve the child by having her interact with the protagonist?

The task becomes easier for children of all ages, but the transition is essentially the same

(participation does not interact with age; \citealp[pp.\ 665--7]{Wellman:2001lz}).

Wellman et al, 2001 figure 6

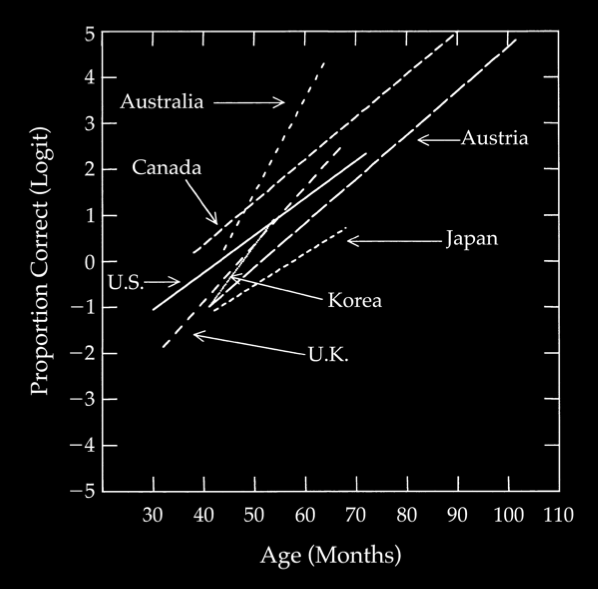

Finally, although there are some cultural differences, you get the same transition in seven different countries.

Wellman et al, 2001 figure 7

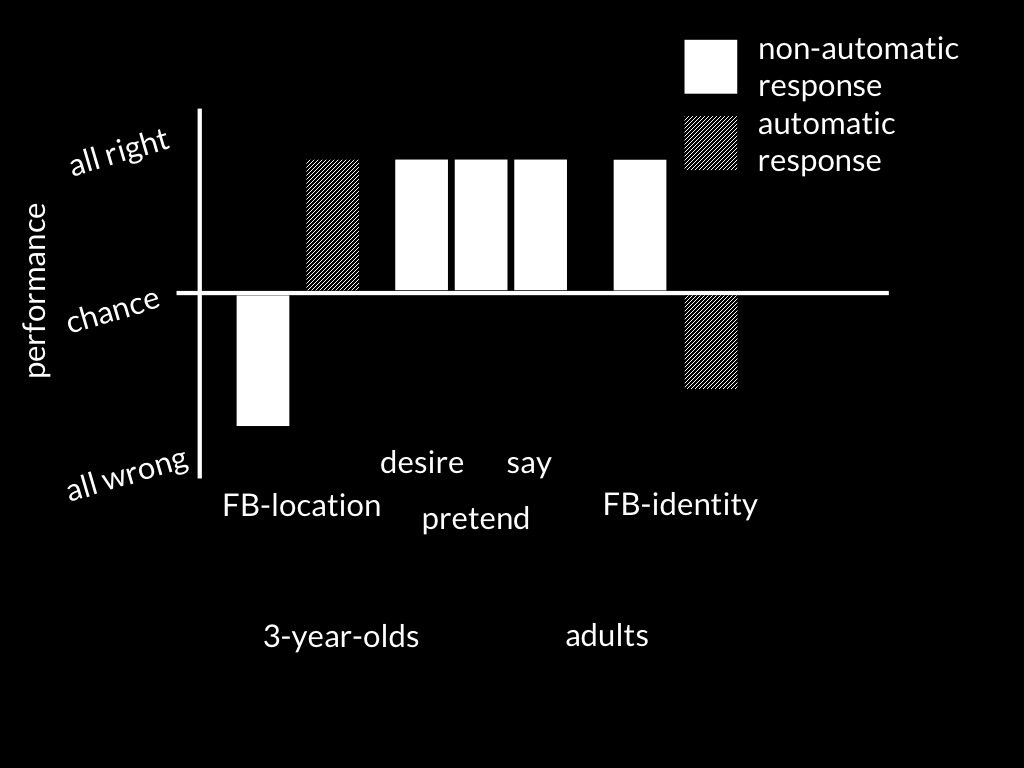

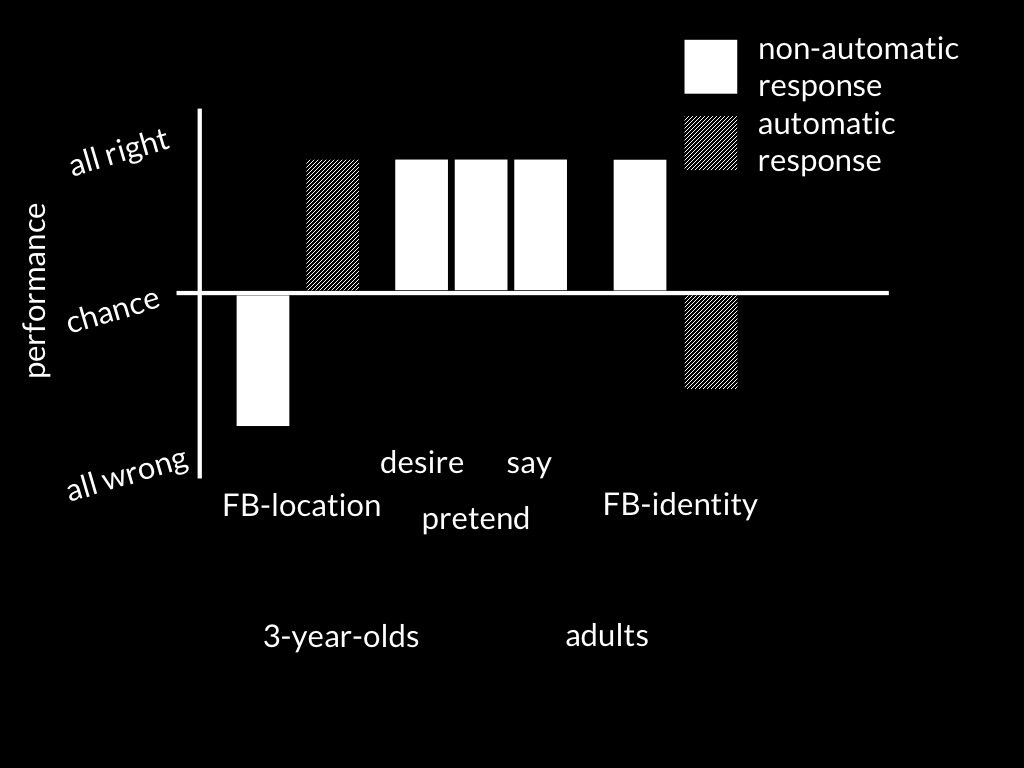

3-year-olds’ performance is not simply the result of performance factors.

After all, they succeed on structurally similar tasks about

pretence, saying or desiring.

[ASIDE ON EF] Further, changing the EF demands of a FB task doesn’t affect performance,

and, conversely, nor do differences between cultures in EF affect FB

performance.

Consistently with this, success on FB predicts later social competence

independently of EF.

But then what about the developmental relation between EF and FB performance?

It's emergence not performance (?).

challenge

Explain the emergence in development

of mindreading.

So our challenge was to explain the emergence of mindreading.

At this point, up until around, it seemed quite straightforward to most researchers.

We seemed to know that children are unaware of mental states until around four years.

And a lot of studies looked at which factors affect their acquiring this awareness.

These studies showed that executive function, language and rich forms of social

interaction are all important.

All of this supported something like the story that Sellars tells in his famous Myth of

Jones.

How does mindreading emerge in development?

Sellars' Myth of Jones

*todo*: describe Sellars' myth; link to Gopnik theory theory idea.

but ...

But there was a big surprise in store for us.

Three-year-olds systematically fail to predict actions \citep{Wimmer:1983dz}

and desires \citep{Astington:1991kk} based on false beliefs; they similarly

fail to retrodict beliefs \citep{Wimmer:1998kx} and to select arguments

suitable for agents with false beliefs \citep{Bartsch:2000es}.

They fail some low-verbal and nonverbal false belief tasks

\citep{Call:1999co,low:2010_preschoolers,krachun:2009_competitive,krachun:2010_new}; they fail whether the question concerns others' or their own (past)

false beliefs \citep{Gopnik:1991db}; and they fail whether they are

interacting or observing \citep{Chandler:1989qa}.

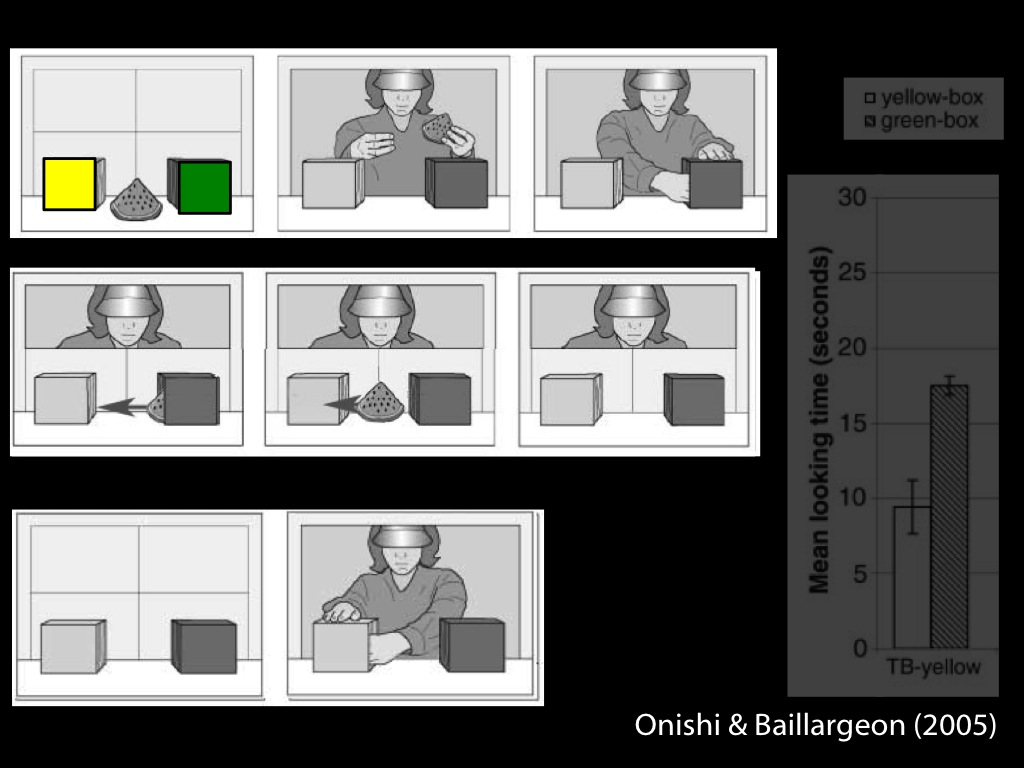

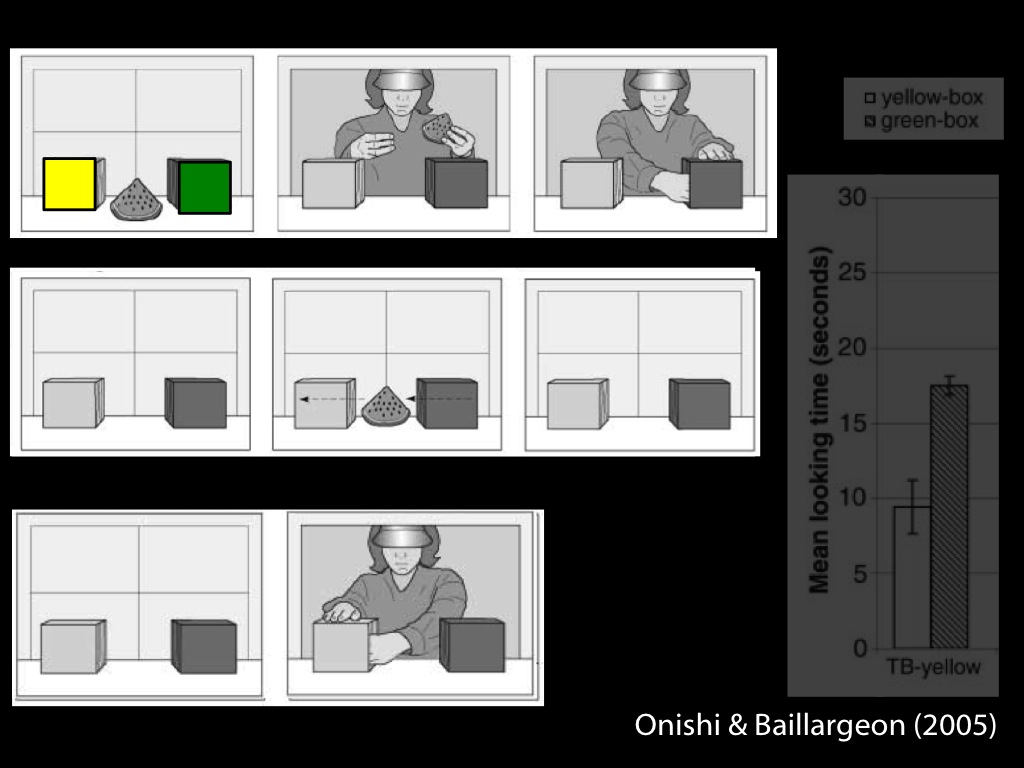

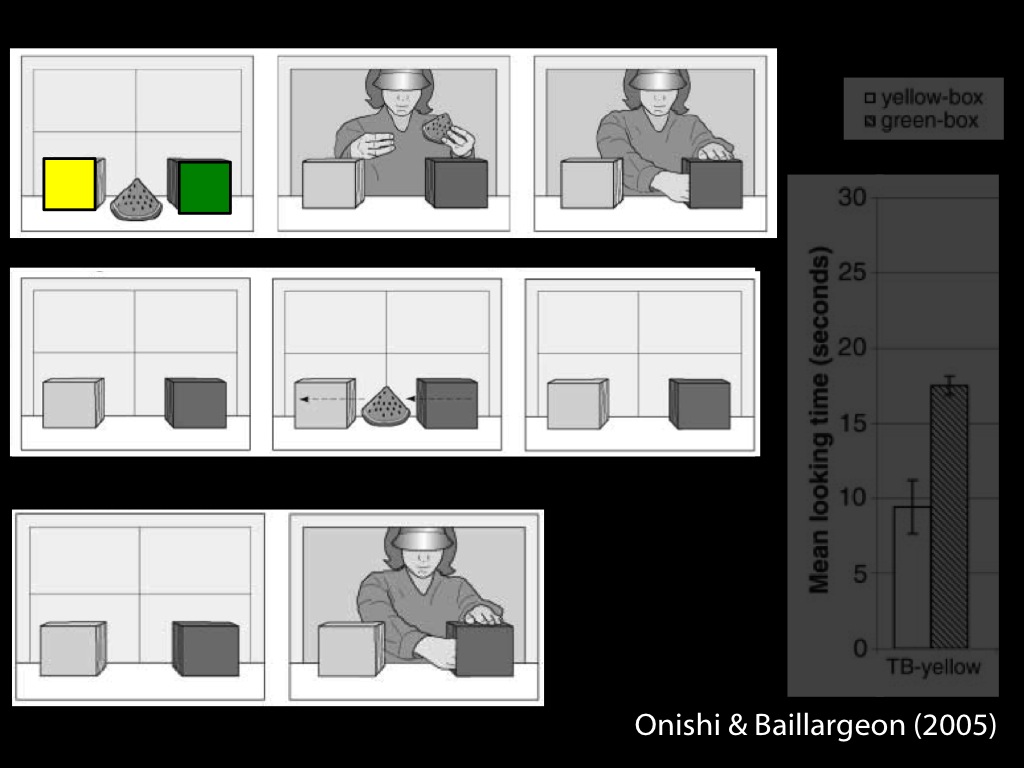

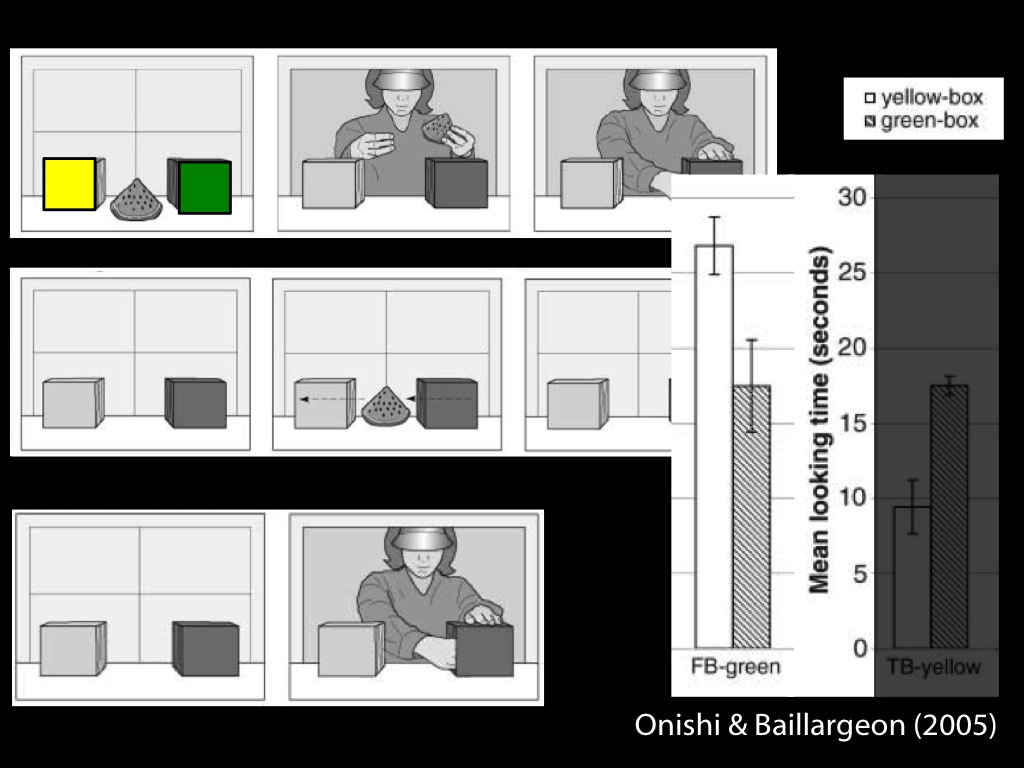


\section{Infants Track False Beliefs}

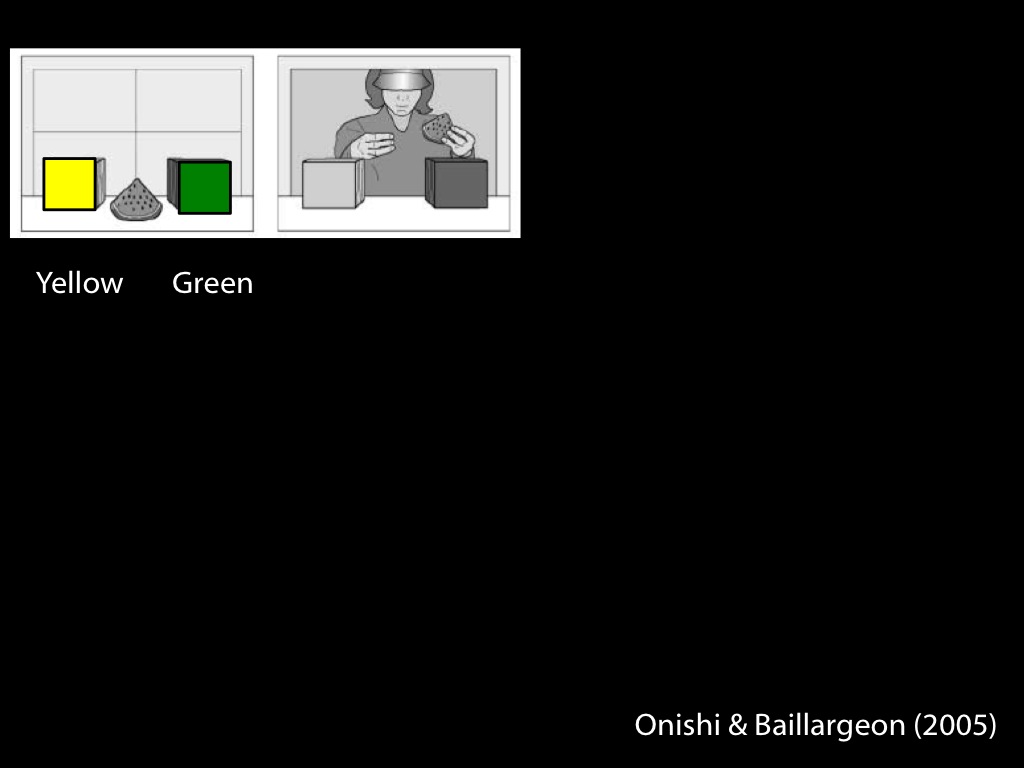

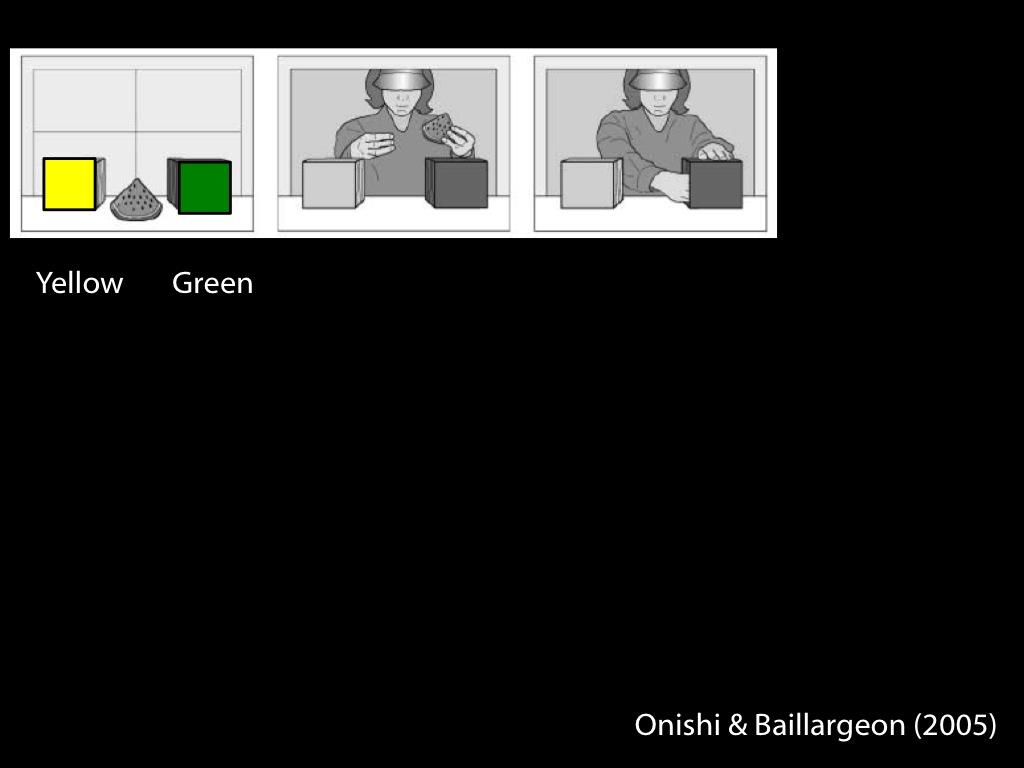

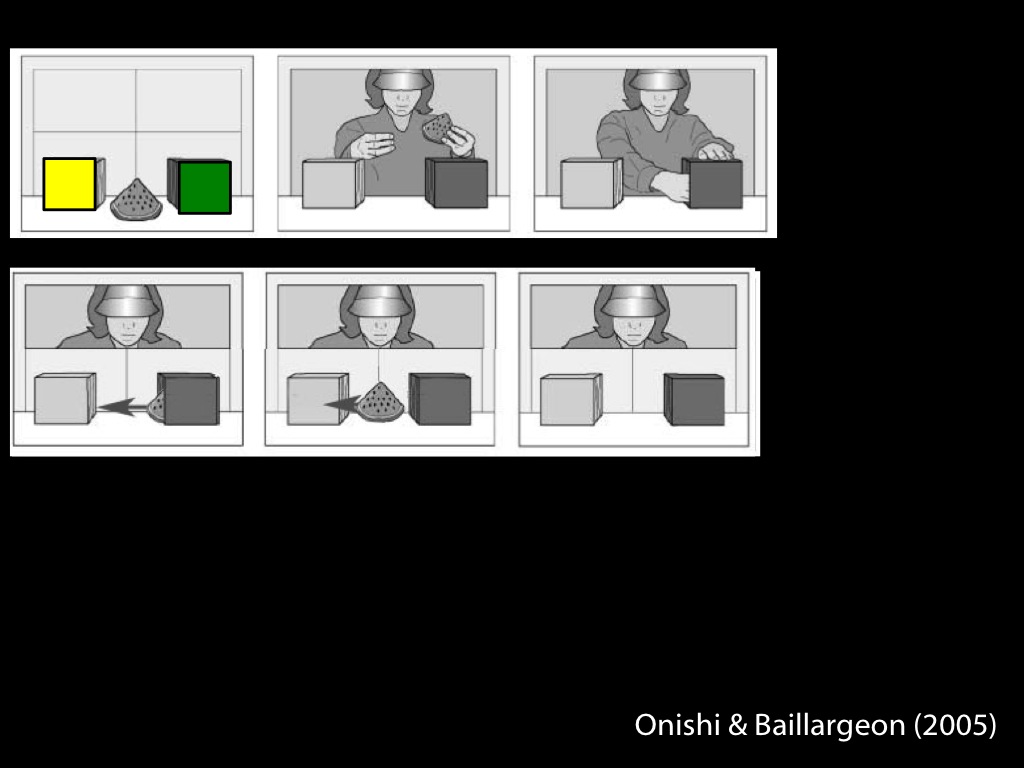

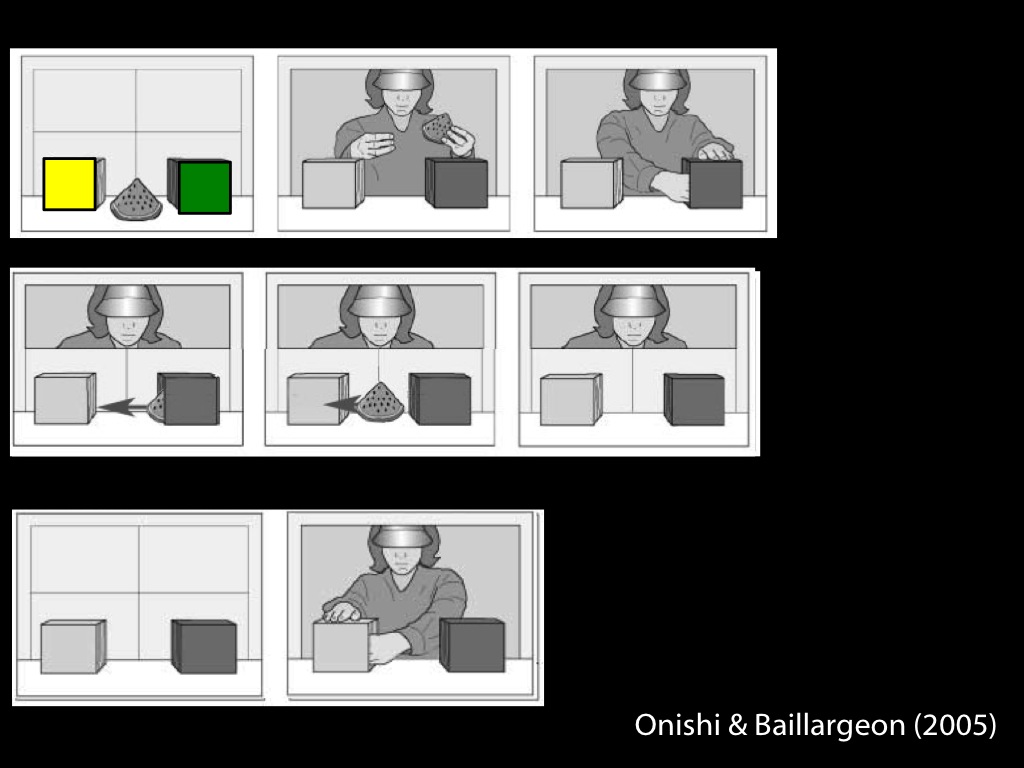

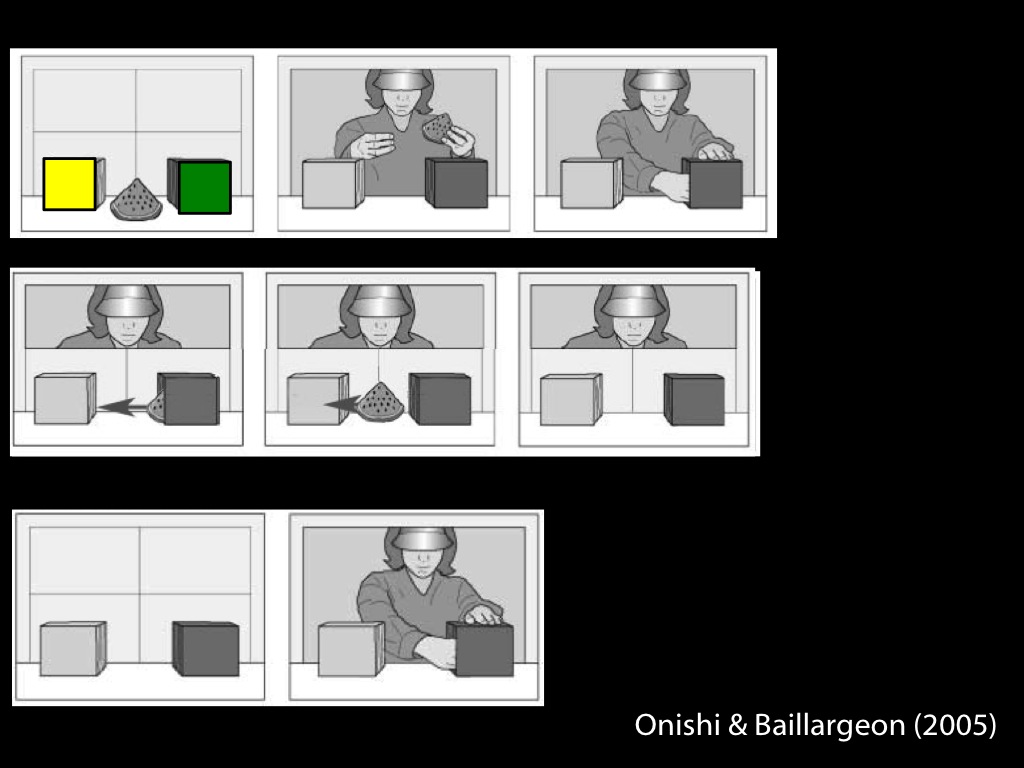

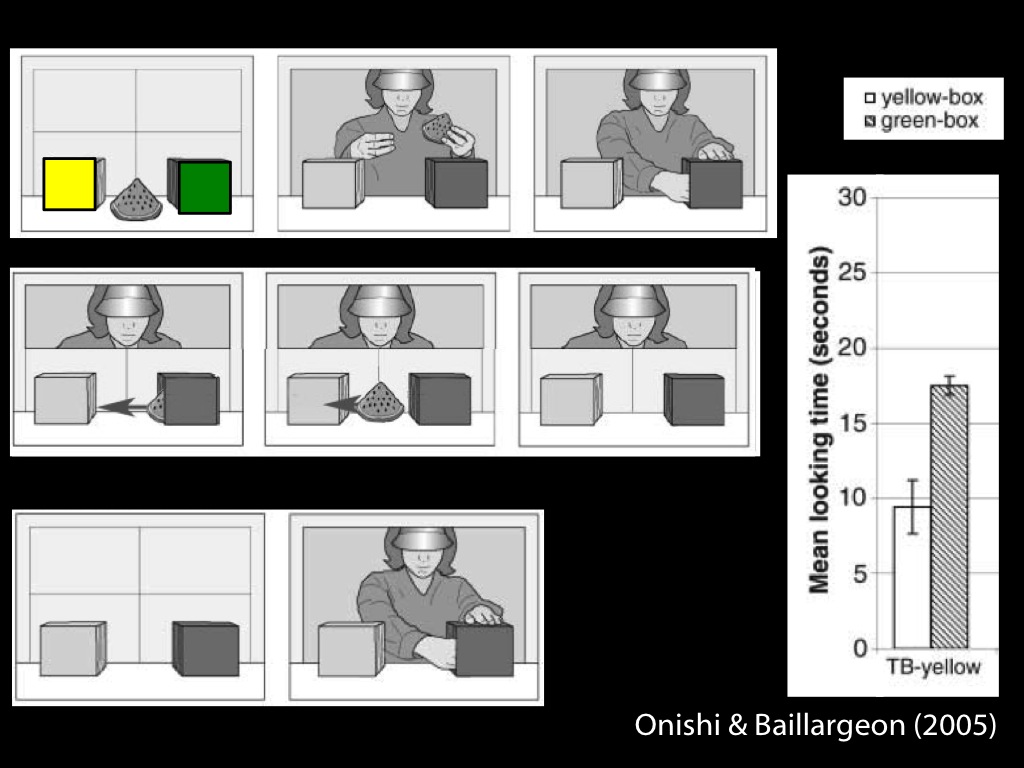

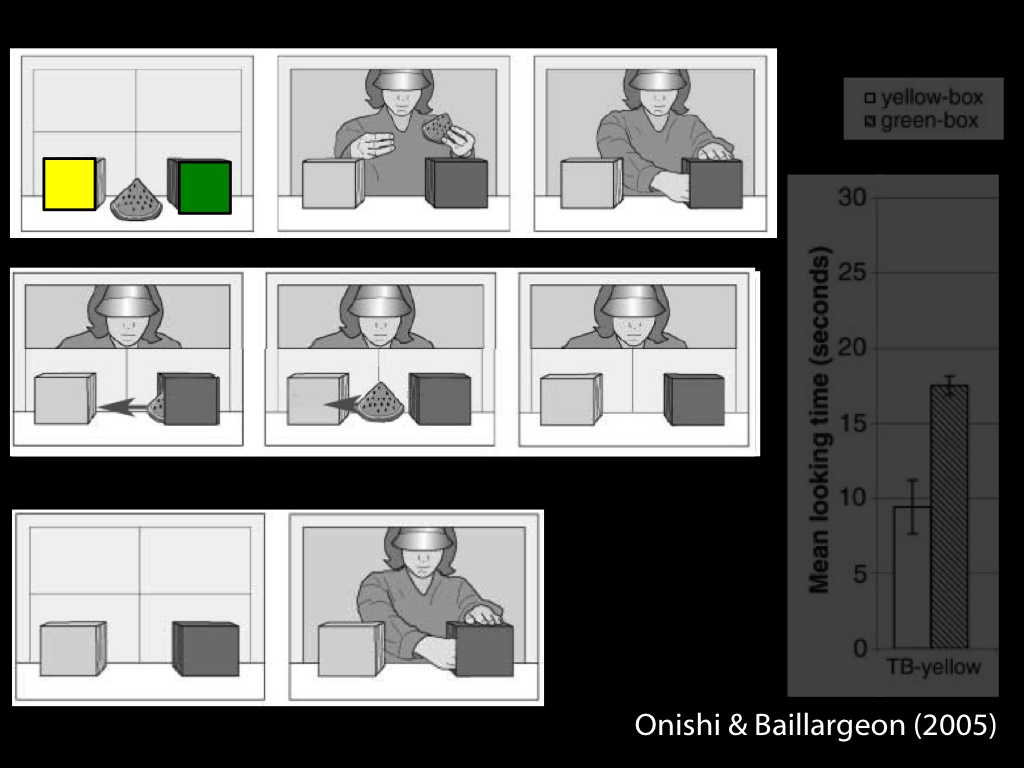

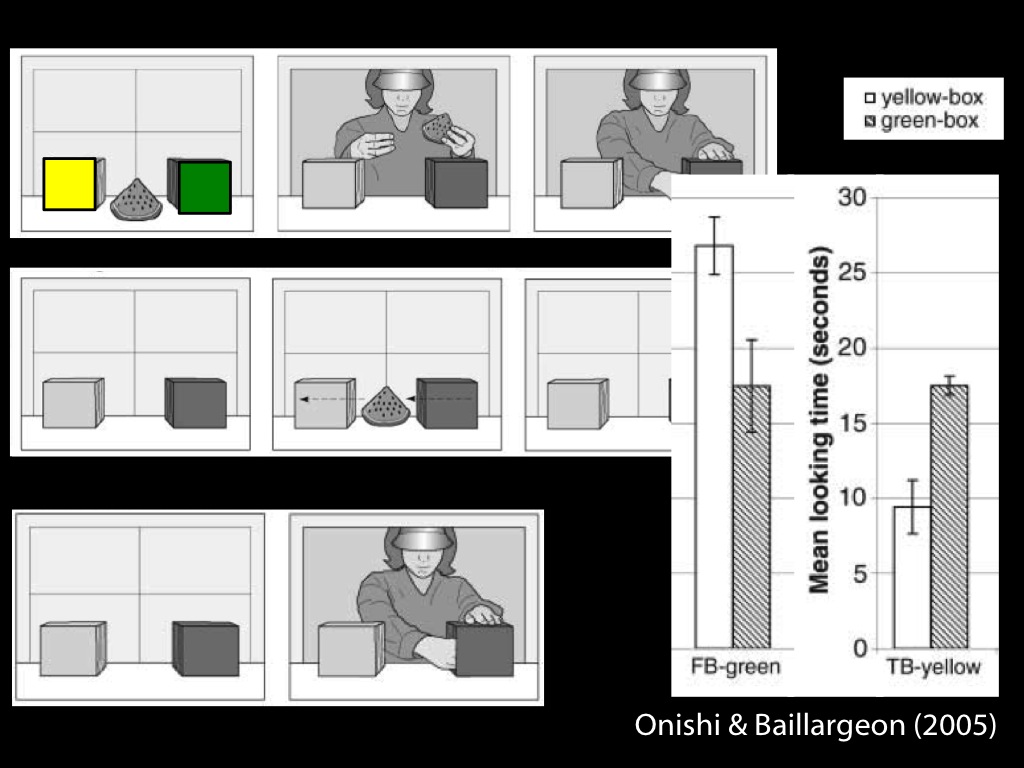

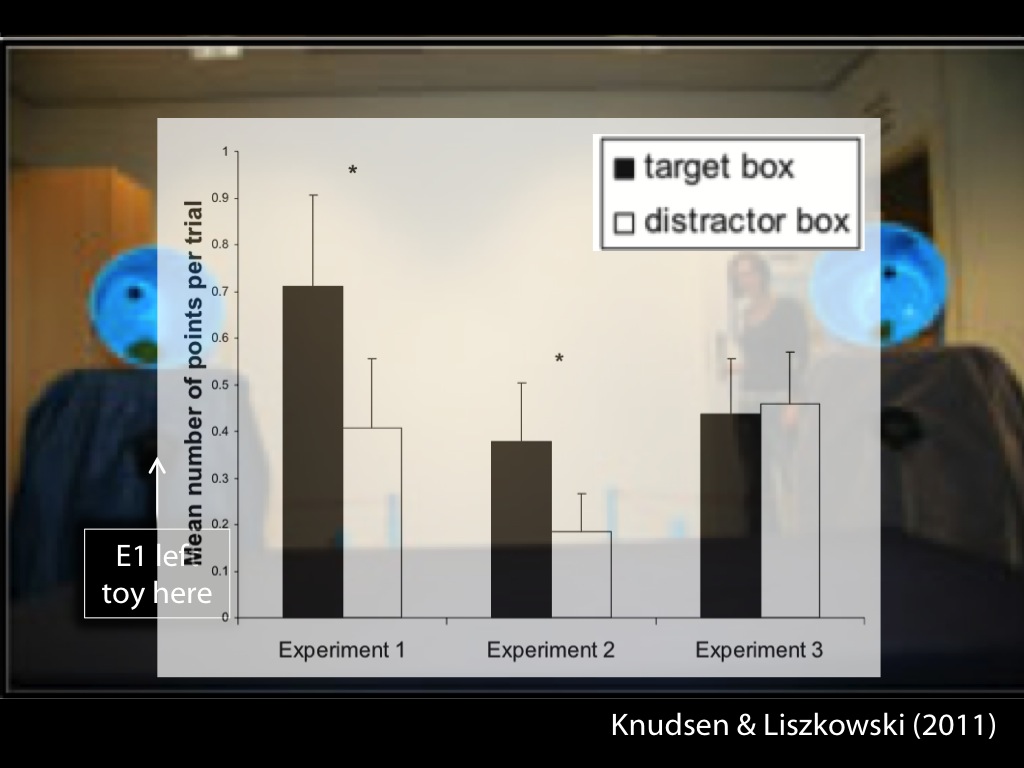

One-year-old children predict actions of agents with false beliefs about the

locations of objects \citep{Clements:1994cw,Onishi:2005hm,Southgate:2007js},

and about the contents of containers \citep{he:2011_false}, taking into

account verbal communication \citep{Song:2008qo,scott:2012_verbal_fb}.

They

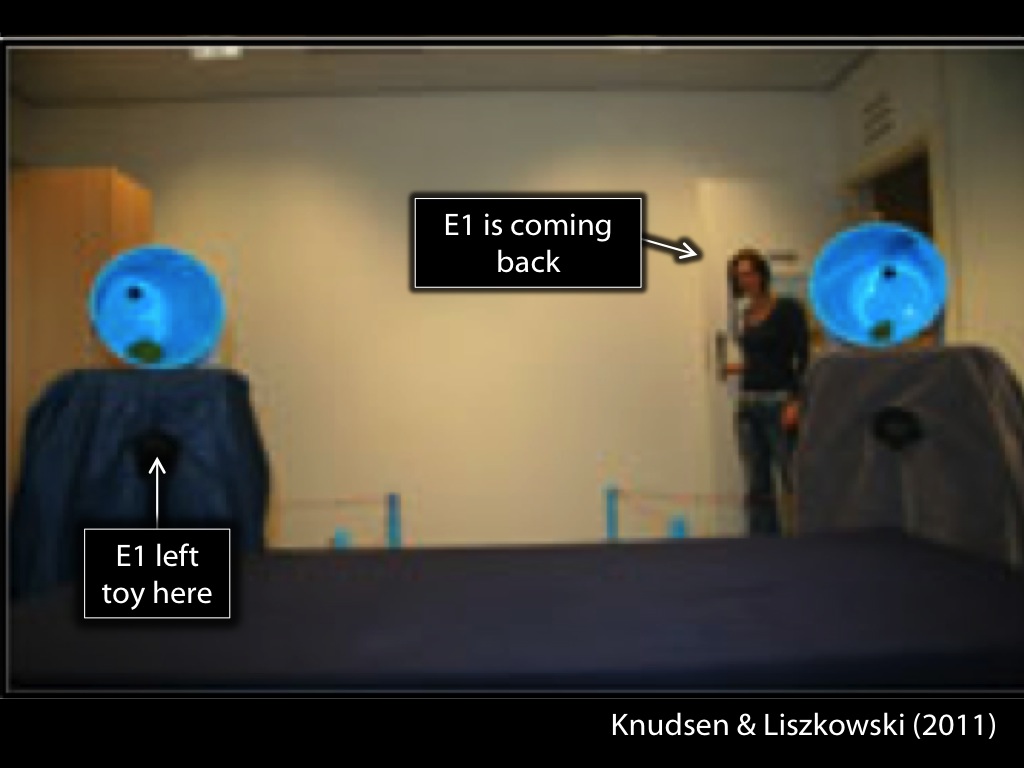

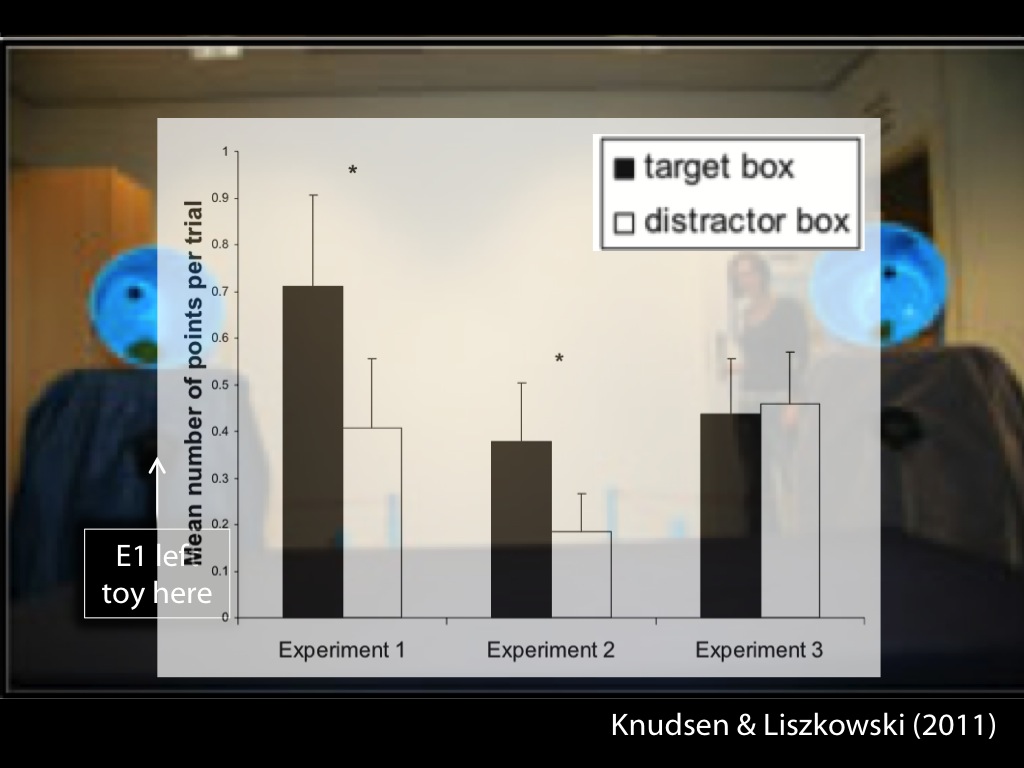

will also choose ways of helping \citep[]{Buttelmann:2009gy} and

communicating \citep{Knudsen:2011fk,southgate:2010fb} with others depending on

whether their beliefs are true or false. And in much the way that irrelevant

facts about the contents of others’ beliefs modulate adult subjects’ response

times, such facts also affect how long 7-month-old infants look at some

stimuli \citep[]{kovacs_social_2010}.

How can we test whether someone is able to ascribe beliefs to others?

Here is one quite famous way to test this; perhaps some of you are already aware of it.

Let's suppose I am the experimenter and you are the subjects.

First I tell you a story ...

‘Maxi puts his chocolate in the BLUE box and leaves the room to play. While he is away (and cannot see), his mother moves the chocolate from the BLUE box to the GREEN box. Later Maxi returns. He wants his chocolate.’

In a standard \textit{false belief task}, `[t]he subject is aware that he/she and

another person [Maxi] witness a certain state of affairs x. Then, in the absence of

the other person the subject witnesses an unexpected change in the state of affairs

from x to y' \citep[p.\ 106]{Wimmer:1983dz}. The task is designed to measure the

subject's sensitivity to the probability that Maxi will falsely believe x to obtain.

I wonder where Maxi will look for his chocolate

‘Where will Maxi look for his chocolate?’

Wimmer & Perner 1983

Recall the experiment that got us started.

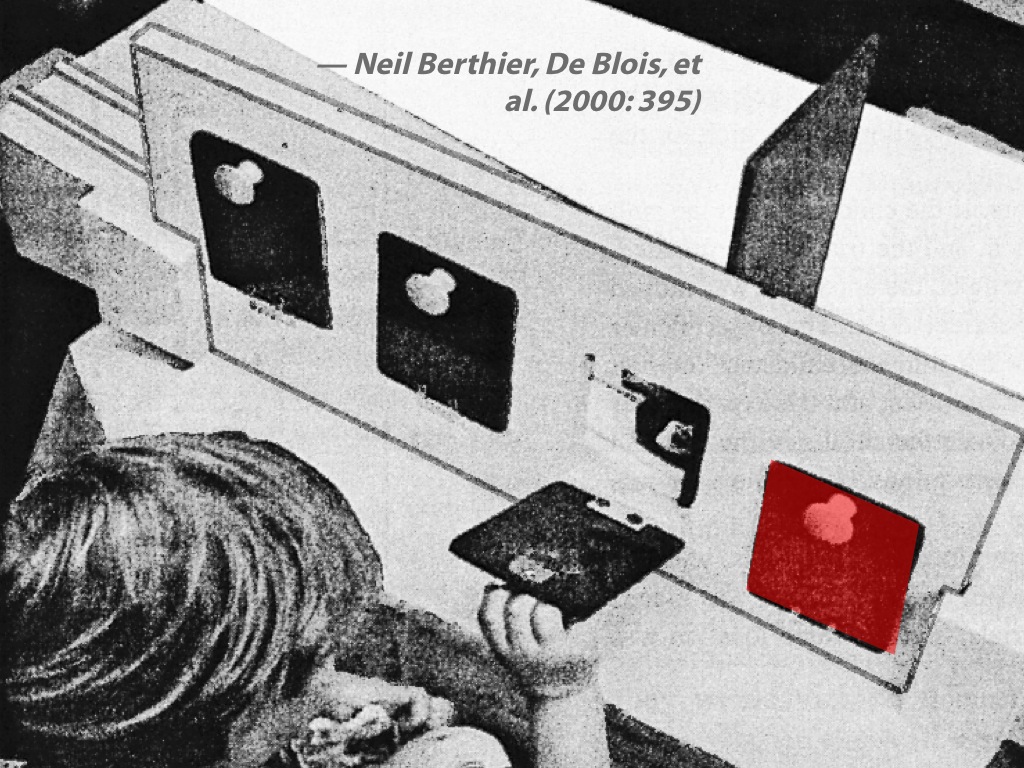

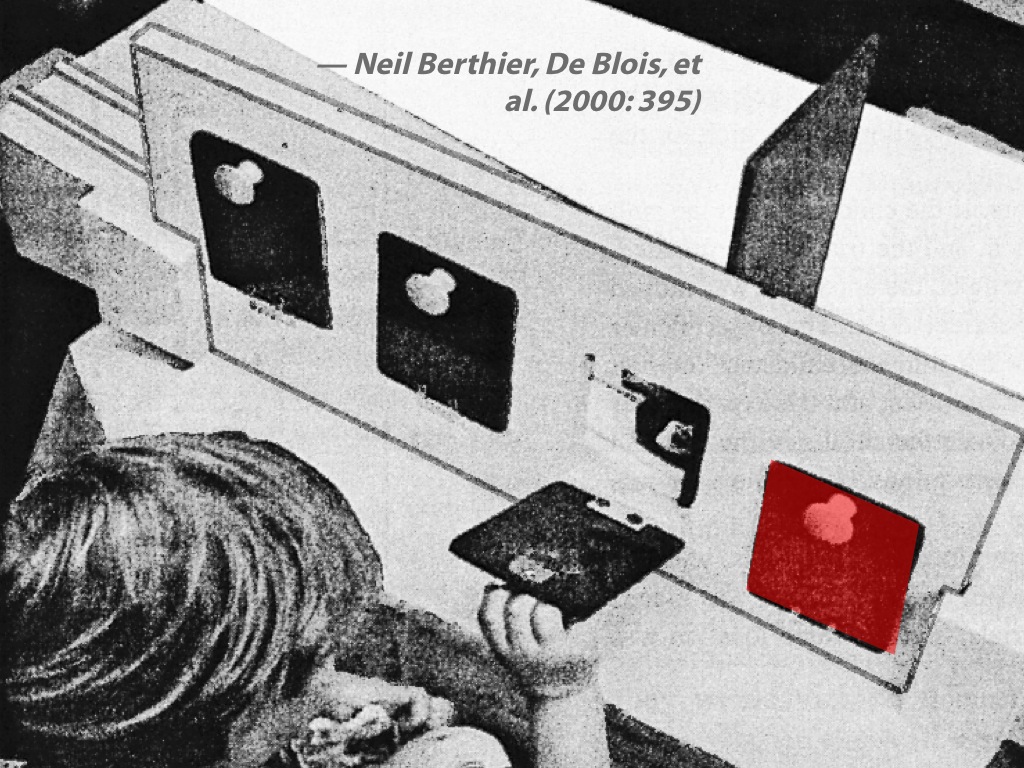

These experimenters added an anticipation prompt and measured to which box subjects looked first \citep{Clements:1994cw}.

(Actually they didn't use this story; theirs was about a mouse called Sam and some cheese, but the differences needn't concern us.)

What got me hooked on philosophical psychology,

and on philosophical issues in the development of mindreading in particular,

was a brilliant finding by Wendy Clements, who was Josef Perner's PhD student.

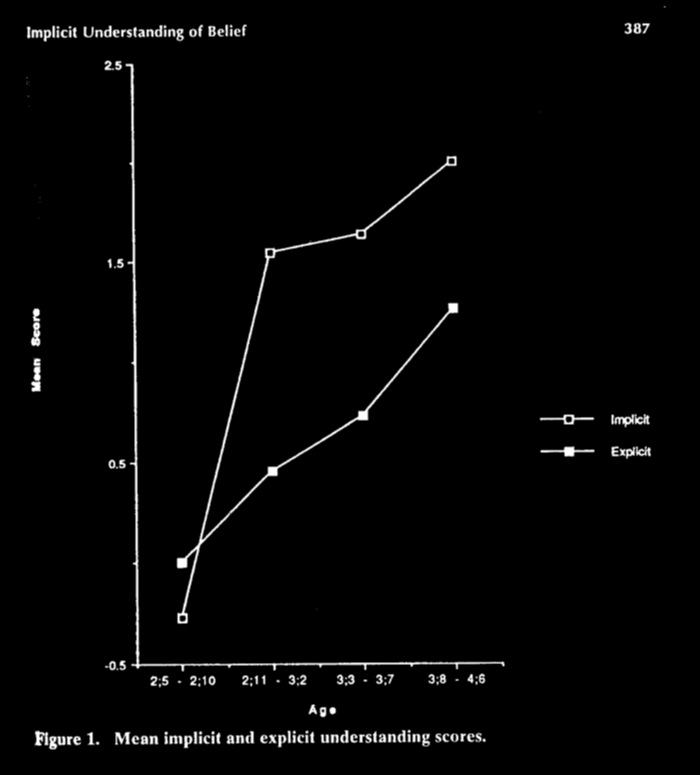

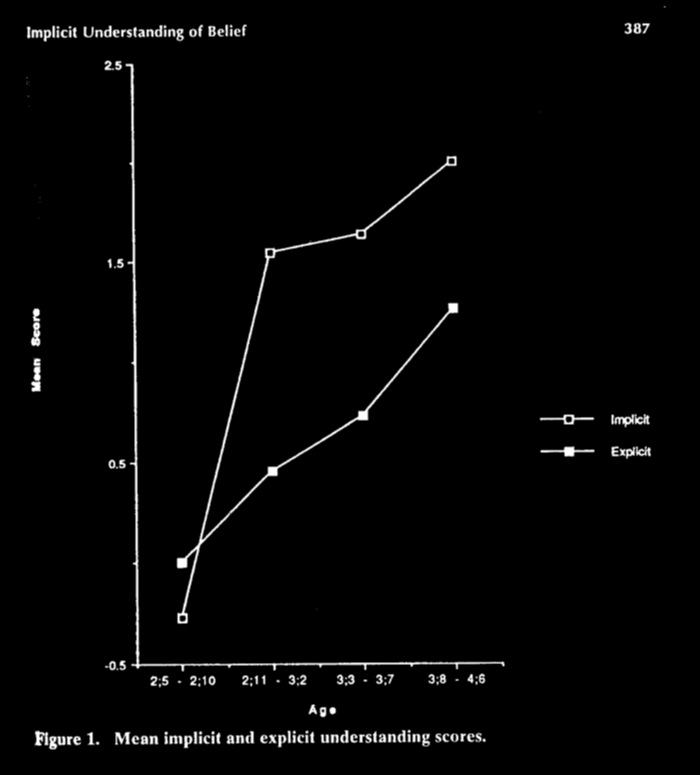

Clements & Perner 1994 figure 1

These findings were carefully confirmed \citep{Clements:2000nc,Garnham:2001ql,Ruffman:2001ng}.

Around 2000 there were a variety of findings pointing in the direction of a conflict between different measures.

These included studies on word learning \citep{Carpenter:2002gc,Happe:2002sr} and false denials \citep{Polak:1999xr}.

But relatively few people were interested until ...

violation-of-expectations at 15 months of age

pointing at 18 months of age

Control experiment:

‘In experiment 3, the adult was ignorant about which of the two locations held her toy.’

Infants track others’ false beliefs from around 7 months of age.

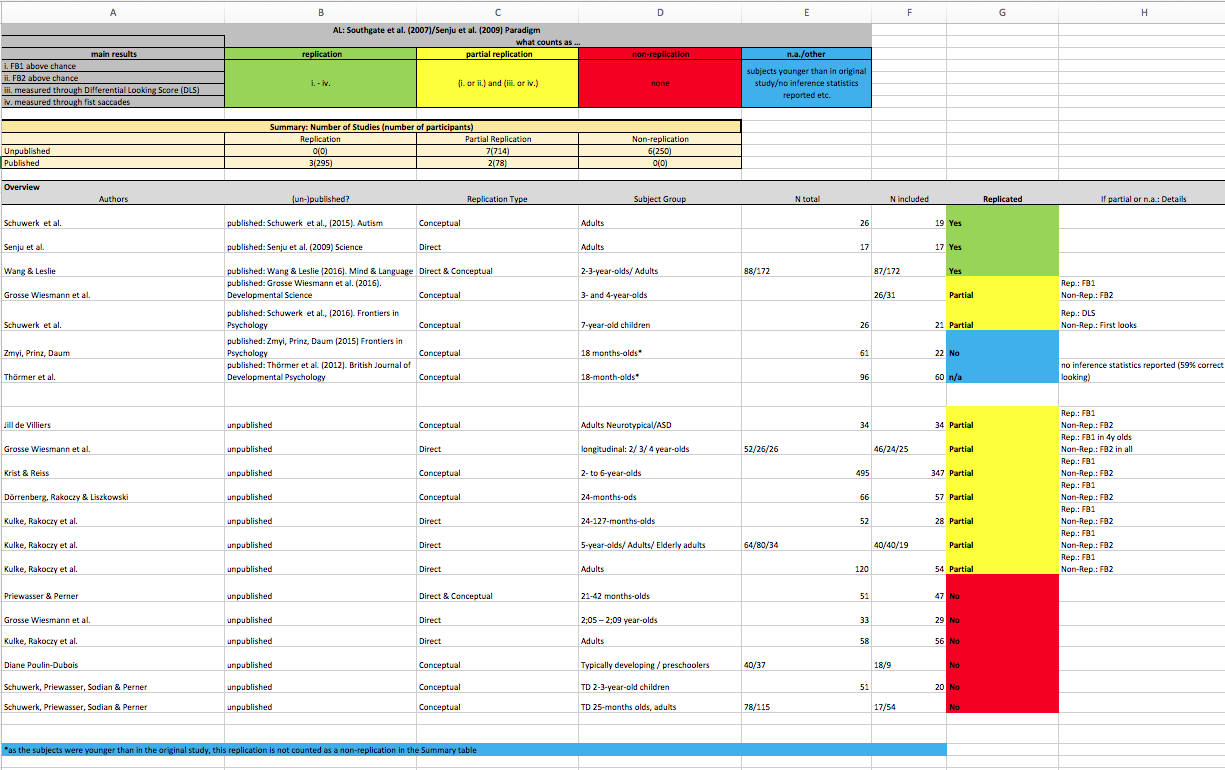

Kulka & Rakoczy, 2018

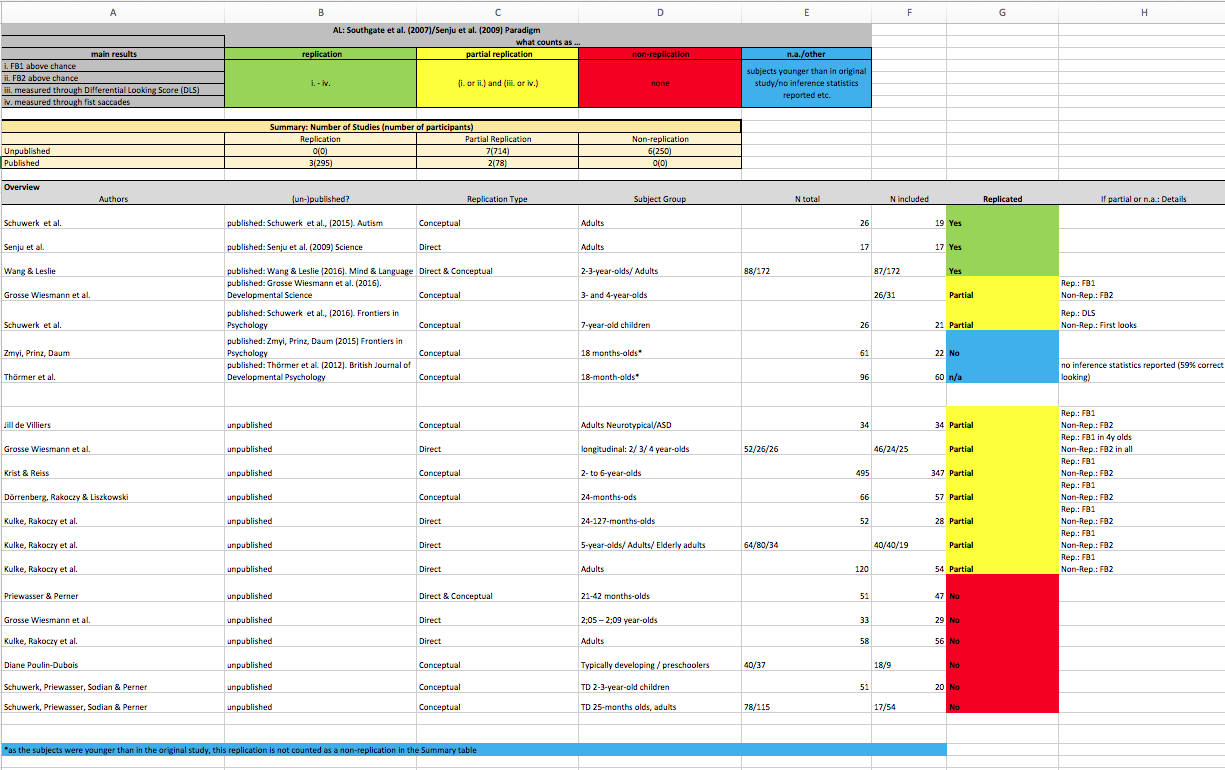

There are some problems with replicating some of the studies which provide

evidence for infant mindreading, as many of you are probably already aware.

(Here’s a fragment of a spreadsheet created by Louisa Kulka and Hannes Rakoczy.)

One response to these difficulties with replication would be to say we just cannot

build theories about infants until the issue has been cleared up.

For my part, I think the replication issue is super significant and the fallout is likely

to be illuminating.

But I also note that plenty of infant mindreading studies have been successfully replicated. So while

we should be cautious about details (and in particular about any one type of measure or scenario),

my sense is that there is clearly an interaction between measures of mindreading and age.

Infants track others’ false beliefs from around 7 months of age.


\section{Mindreading: a Developmental Puzzle}

An \emph{A-Task} is any false belief task that children tend to fail until around

three to five years of age.

\begin{enumerate}

\item Children fail A-tasks

because they rely on a model of minds and actions that does not incorporate beliefs.

\item Children pass non-A-tasks

by relying on a model of minds and actions that does incorporate beliefs.

\item At any time, the child has a single model of minds and actions.

\end{enumerate}

For adults (and children who can do this), representing perceptions and beliefs as such (and even merely holding in mind what another believes, where no inference is required) involves a measurable processing cost \citep{apperly:2008_back,apperly:2010_limits}, consumes attention and working memory even in fully competent adults \citep{Apperly:2009cc,lin:2010_reflexively,McKinnon:2007rr}, may require inhibition \citep{bull:2008_role} and makes demands on executive function \citep{apperly:2004_frontal,samson:2005_seeing}.

challenge

Explain the emergence in development

of mindreading.

The challenge is to explain the emergence, in evolution or development, of mindreading.

Initially it looked like this was going to be relatively straightforward and involve just language, social interaction and executive function.

So a Myth of Jones style story seemed viable.

But the findings of competence in infants of around one year of age change this.

These findings tell us that not all abilities to represent others' mental states can depend on things like language.

And, as I've been stressing, these findings also create a puzzle.

The puzzle is, roughly, how to reconcile infants' competence with three-year-olds' failure.

Two models of minds and actions

Maxi wants his chocolate.

Maxi wants his chocolate.

Maxi believes his chocolate is in the blue box.

Maxi’s chocolate is in the green box.

Maxi will look in the blue box.

Maxi will look in the green box.

Let me start by taking you back to the early eighties.

(Has anyone else been enjoying Deutschland drei und achtzig?)

3-year-olds fail false belief tasks

3-year-olds fail a wide variety of tasks where they are asked about a

false belief, or asked to predict how someone with a false belief will

act, ...

prediction

- action (Wimmer & Perner 1983)

or asked to predict what someone with a false belief will desire ...

- desire (Astington & Gopnik 1991)

... or to retrodict or explain a false belief after being shown how someone acts.

retrodiction or explanation (Wimmer & Mayringer 1998)

select a suitable argument (Bartsch & London 2000)

Further,

lots of factors make no difference to 3-year-olds’ performance: they fail

tasks about others’ beliefs and they fail tasks about their own beliefs;

own beliefs (first person) (Gopnik & Slaughter 1991)

they fail when they are merely observers as well as when they are actively

involved;

involvement (deception) (Chandler et al 1989)

they fail when a verbal response is required and also when a

nonverbal communicative response or even a noncommunicative response (such

as hiding an object) is required.

nonverbal response (Call et al 1999; Krachun et al, 2010; Low 2010 exp.2)

And they fail test questions which are word-for-word identical to desire and pretence tasks

test questions word-for-word identical to desire and pretence tasks (Gopnik et al 1994; Custer 1996)

A-tasks

An A-Task is any false belief task that children tend to fail until around

three to five years of age.

Why do children systematically fail A-tasks? There is a simple explanation ...

Children fail

because they rely on a model of minds and actions that does not incorporate beliefs

[Stress that, on this view, children do have a model of minds and actions.

It’s just that it doesn’t incorporate belief.]

Perner and others have championed the view that children who fail A-tasks

lack a metarepresentational understanding of propositional attitudes

altogether. But this view has recently (well, not that recently, it’s nearly

a decade old now) been challenged by Hannes’ discovery

that children can solve tasks which are like A-tasks but involve incompatible

desires \citep{rakoczy:2007_desire}. I think this makes plausible the thought

that there is an age at which

children fail A-tasks not because they have a problem with mental states

in general, but because they have a problem with beliefs in particular.

It turns out that, in the first and second years of life, infants show abilities

to track false beliefs on a variety of measures.

Infants’ false-belief tracking abilities

Violation of expectations

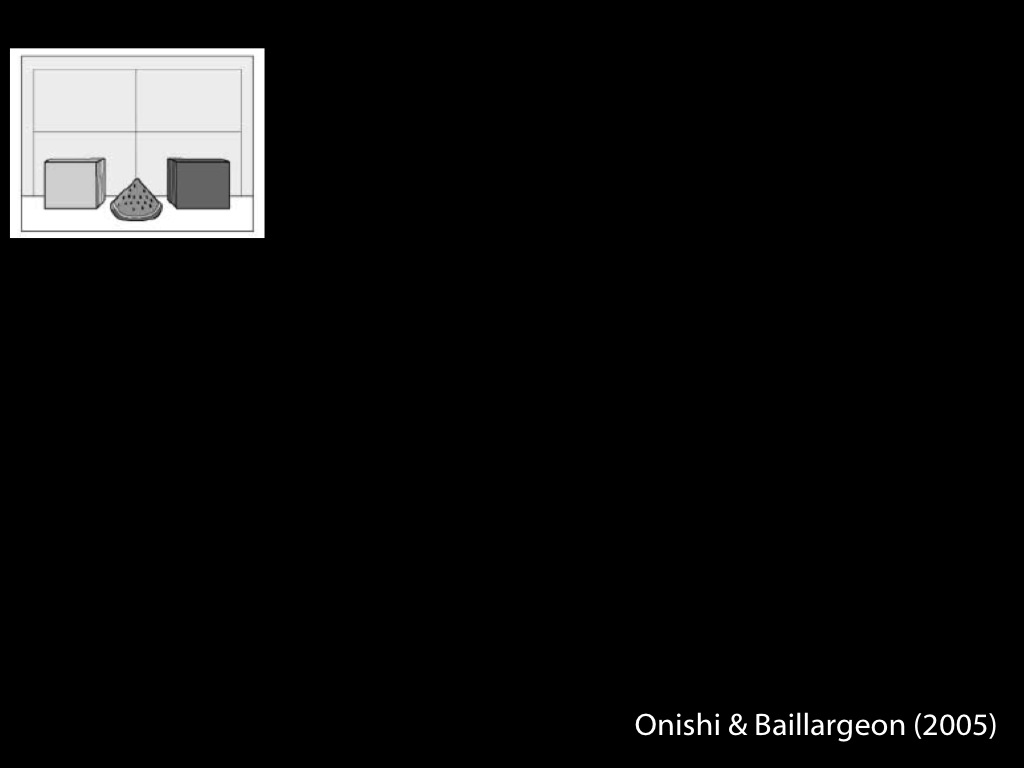

- with change of location (Onishi & Baillargeon 2005)

- with deceptive contents (He et al 2011)

- observing verbal comm. (Song et al 2008; Scott et al 2012)

Anticipating action (Clements & Perner 1994)

looking (Southgate et al 2007)

pointing (Knudsen & Liszkowski 2011)

Helping (Buttelmann et al 2009, 2015)

Communicating (Southgate et al 2010)

non-A-tasks

A non-A-Task is a task that is not an A-task. I was tempted to call these

B-Tasks, but that would imply that they have a unity. And whereas we know

from a meta-analysis that A-tasks do seem to measure a single underlying

competence, we don’t yet know whether all non-A-tasks measure a single thing

or whether there might be several different things.

Why do infants systematically pass non-A-tasks in the first year

or two of life? There is a simple explanation ...

Children pass

by relying on a model of minds and actions that does incorporate beliefs

And this is *almost* everything we need to generate the puzzle about development.

A-tasks

Children fail

because they rely on a model of minds and actions that does not incorporate beliefs

Children fail A-tasks

because they rely on a model of minds and actions that does not incorporate beliefs.

non-A-tasks

Children pass

by relying on a model of minds and actions that does incorporate beliefs

Children pass non-A-tasks

by relying on a model of minds and actions that does incorporate beliefs.

the dogma of mindreading

The dogma of mindreading (momentary version): any individual has at

most one model of minds and actions

at any one point in time.

There is also a developmental version of the dogma: the developmental dogma is that

there is either just one model or else a family of models where

one of the models, the best and most sophisticated model,

contains all of the states that are contained in

any of the models.
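The developmental version of the dogma is a claim about set containment, so it can be stated as a small check. This is a sketch under assumptions. Representing a model by the set of kinds of mental state it contains is an illustrative simplification. The state labels used here are hypothetical and make no claim about which states any real model contains.

```python
def satisfies_developmental_dogma(models):
    """Check the developmental dogma for a family of models.

    Each model is represented (hypothetically) as a frozenset naming the kinds
    of state it contains. The dogma holds if some single model contains every
    state that appears in any model of the family; a one-model family passes
    trivially.
    """
    if not models:
        raise ValueError("the family must contain at least one model")
    all_states = frozenset().union(*models)
    return any(model >= all_states for model in models)


# Hypothetical families, for illustration only
nested = [frozenset({"desire", "perception"}),
          frozenset({"desire", "perception", "belief"})]
divergent = [frozenset({"desire", "belief"}),
             frozenset({"desire", "perception"})]

print(satisfies_developmental_dogma(nested))     # True
print(satisfies_developmental_dogma(divergent))  # False
```

The divergent family fails because neither model contains all three kinds of state, even though each is a perfectly good model on its own.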

We’ve seen that ...

To get a contradiction we need one further ingredient.

These three claims are jointly inconsistent so one of them must be false.

Researchers disagree about which claim to reject.

But I suppose you can tell from how I’ve labelled them which one I

propose to reject.
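The structure of the inconsistency can be checked mechanically. This sketch makes one assumption explicit that the text only implies: there is some age at which a child both fails an A-task and passes a non-A-task. The function names are hypothetical. The code captures only the logic of the three claims, not any data.

```python
from typing import Set


def belief_values_needed(fails_a_task: bool, passes_non_a_task: bool) -> Set[bool]:
    """For each model the child must be relying on, record whether it incorporates beliefs."""
    needed: Set[bool] = set()
    if fails_a_task:
        needed.add(False)  # claim 1: failure comes from a model without beliefs
    if passes_non_a_task:
        needed.add(True)   # claim 2: success comes from a model with beliefs
    return needed


def consistent_with_momentary_dogma(fails_a_task: bool, passes_non_a_task: bool) -> bool:
    # No model both incorporates and lacks beliefs, so each distinct value needs
    # its own model; claim 3 allows at most one model at any one time.
    return len(belief_values_needed(fails_a_task, passes_non_a_task)) <= 1


print(consistent_with_momentary_dogma(fails_a_task=True, passes_non_a_task=False))  # True
print(consistent_with_momentary_dogma(fails_a_task=True, passes_non_a_task=True))   # False
```

Rejecting any one claim removes the contradiction. For example, dropping claim 3 simply allows the returned set to have two members.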

The puzzle is a little bit like the puzzle we had in the case of knowledge

of physical objects.

But it's also different.

In the case of physical objects, the conflict was between measures

involving looking and measures involving searching.

In this case it's different, because on the infant side there is not just

looking but also acting (e.g. helping) and even communicating.

| domain | evidence for knowledge in infancy | evidence against knowledge |
| --- | --- | --- |
| colour | categories used in learning labels & functions | failure to use colour as a dimension in ‘same as’ judgements |
| physical objects | patterns of dishabituation and anticipatory looking | unreflected in planned action (may influence online control) |
| number | (as above) | (as above) |
| syntax | anticipatory looking | [as adults] |
| minds | reflected in anticipatory looking, communication, &c | not reflected in judgements about action, desire, ... |

What is the developmental puzzle about false belief?

A-tasks

Children fail

because they rely on a model of minds and actions that does not incorporate beliefs

Children fail A-tasks

because they rely on a model of minds and actions that does not incorporate beliefs.

non-A-tasks

Children pass

by relying on a model of minds and actions that does incorporate beliefs

Children pass non-A-tasks

by relying on a model of minds and actions that does incorporate beliefs.

the dogma of mindreading

The dogma of mindreading (momentary version): any individual has at

most one model of minds and actions

at any one point in time.

There is also a developmental version of the dogma: the developmental dogma is that

there is either just one model or else a family of models where

one of the models, the best and most sophisticated model,

contains all of the states that are contained in

any of the models.

Sketchy Idea

Infants have core knowledge of minds and actions.

Core knowledge is sufficient for success on non-A-tasks only.

Infants lack knowledge of minds and actions.

Knowledge is necessary for success on A-tasks.

Why accept this conjecture?

So far no reason has been given at all.

And it barely makes sense. There are just so many assumptions.

All I’m really saying is that I hope this case, knowledge of minds,

will turn out to be like the other cases.

Why accept this conjecture?

And what form does the core knowledge take?

In every case so far, we have had to identify infant with adult competencies.

(Core knowledge is for life, not just for infancy.)

\section{Mindreading Chimpanzees?}

apes track beliefs

For a process to \emph{track} something is for how it unfolds to nonaccidentally depend,

perhaps within a limited but useful range of circumstances,

on facts about that thing.

(e.g. Krachun et al, 2009; Krupenye et al, 2017)

So for a process to track beliefs is for ...

I’ll often talk about an animal rather than a process tracking beliefs.

For example, recent research indicates that apes can track others’ beliefs.

*TODO* link to video!

For an animal to track beliefs is for there to be some process in the animal that tracks beliefs.

[don’t say: Once we have established that a given type of animal can track beliefs,

two questions arise, which PROCESS and which MODEL?]

Why should we care whether apes can track beliefs (and other mental states)?

Much of the interest in this question is driven by theory of mind ...

‘In saying that an individual has a theory of mind, we mean that the individual [can represent] mental states’

\citep[p.\ 515]{premack_does_1978}

Premack & Woodruff, 1978 p. 515

Have we answered Premack and Woodruff’s question?

Some suggest that we have ...

apes track beliefs ∴ they are mindreaders ?

For the purposes of this talk, I am going to assume that we can make this inference,

at least in some circumstances.

But I think there are two COMPLICATIONS which need to be addressed before we can be

confident that we understand why we can make the inference. The first is simple:

tracking does not logically entail representing ...

| track | by representing |
| --- | --- |
| toxicity | odour |
| visibility | line-of-sight |
| belief | ? |

To say that someone tracks beliefs does not entail saying that she represents beliefs.

In general, you can track something by representing something else.
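The toxicity/odour row of the table can be made concrete. In this hypothetical sketch, the `Food` class and the `avoids` function are illustrations, not a model of any real animal. The environment is set up so that toxic things smell foul, and in that environment the process's behaviour depends on toxicity. Yet the only property it ever consults is odour.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Food:
    toxic: bool        # the property being tracked; the process never reads it
    smells_foul: bool  # the property the process actually represents


def avoids(food: Food) -> bool:
    """Hypothetical avoidance process that consults only odour."""
    return food.smells_foul


# Within the limited but useful range, odour and toxicity covary ...
ordinary = [Food(toxic=True, smells_foul=True), Food(toxic=False, smells_foul=False)]
print(all(avoids(f) == f.toxic for f in ordinary))  # True: the process tracks toxicity

# ... outside it, an odourless toxin shows that toxicity was never represented.
odourless_toxin = Food(toxic=True, smells_foul=False)
print(avoids(odourless_toxin))  # False
```

The same reasoning is why observations that an animal tracks beliefs leave open what it represents in doing so.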

Note that this complication is not supposed to show that we cannot make

the inference from tracking to mindreading.

After all, such inferences are not supposed to be deductive.

Nor do I intend to suggest that we should somehow find a different inference

which is deductive.

Instead my point is simply this: as the inference is not deductive, there are bound

to be tricky questions about how observations of tracking support conclusions about representing.

[LATER: one further complication will be that there are multiple models of minds and actions.]

Two questions:

\begin{enumerate}

\item How do observations about tracking support conclusions about representing?

\item Why are there dissociations in nonhuman apes’, human infants’ and human adults’ performance on belief-tracking tasks?

\end{enumerate}

Q1

How do observations about tracking support conclusions about representing models?

Q2

Why are there dissociations in nonhuman apes’, human infants’ and human adults’ performance on belief-tracking tasks?

To stress: this is a genuine question. The assumption is that you can infer representing

from tracking. What we need, I think, is a clearer idea of how such inferences might succeed.

So my question is whether we should infer mindreading from tracking. I think there is a

further COMPLICATION which should hold us back from making the inference without further scrutiny ...

second complication : dissociations in performance

‘the present evidence may constitute an implicit understanding of belief’

\citep[p.~113]{krupenye:2016_great}

Krupenye et al, 2016 p. 113

| study | type | success? |
| --- | --- | --- |
| Call et al, 1999 | object choice (coop) | fail |
| Krachun et al, 2009 | ‘chimp chess’ (competitive, action) | fail |
| Krachun et al, 2009 | ‘chimp chess’ (competitive, gaze) | pass A, fail B |
| Krachun et al, 2010 | change of contents | fail |
| Krupenye et al, 2017 | anticipatory looking (2 scenarios) | pass both |

Commenting on their success in showing that great apes can track false beliefs,

Krupenye et al remark that ...

Why do they say ‘implicit’?

I think it’s because they expect dissociations: just as there are dissociations among different

measures of mindreading in adults, and developmental dissociations, so it is plausible that there

will turn out to be dissociations concerning the tasks that adult humans and adult nonhumans can

pass.

Indeed, we can see signs of dissociations if we go back to earlier work with great apes by Karla

Krachun and colleagues ...

Invoking ‘implicit’ cannot explain the dissociations, because you could just as well have invoked ‘implicit’ for a completely different pattern of findings.

Two questions:

\begin{enumerate}

\item How do observations about tracking support conclusions about representing?

\item Why are there dissociations in nonhuman apes’, human infants’ and human adults’ performance on belief-tracking tasks?

\end{enumerate}

Q1

How do observations about tracking support conclusions about representing models?

Q2

Why are there dissociations in nonhuman apes’, human infants’ and human adults’ performance on belief-tracking tasks?

So now we have a second question to answer

We shouldn’t draw conclusions about mindreading from tracking before

we can answer at least these two questions.

Our concern here is with the infants, but I hope the comparison with nonhumans can help us ...

‘the core theoretical problem in ... animal mindreading is that ... the conception of mindreading that dominates the field ... is too underspecified to allow effective communication among researchers’

‘the core theoretical problem in contemporary research on animal mindreading is

that ... the conception of mindreading that dominates the field

... is too underspecified to allow effective communication among researchers,

and reliable identification of evolutionary precursors of human mindreading through

observation and experiment.’

\citep[p.~321]{heyes:2014_animal}

Heyes (2015, 321)

What does Heyes mean?

Aside ...

we don’t know much about adult humans’ mindreading abilities


How can we more fully specify mindreading?

To more fully specify mindreading we need a theory that specifies

both the models and the processes involved in mindreading.

A model is a way the world could logically be, or a set of ways the world could logically be.

Some models can conveniently be specified by theories, others by equations.

(Note that a model isn’t a theory, nor is it a set of equations.)
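The contrast between a model and a theory that specifies it can be illustrated with a toy space of possible worlds. This is a sketch under simplifying assumptions. The worlds are hypothetical triples of locations, and `model_of`, `theory_a` and `theory_b` are illustrative names. The two theories are worded differently but pick out the same set of worlds, so they specify one and the same model.

```python
from itertools import product

LOCATIONS = ("blue box", "green box")

# Hypothetical space of ways the world could be:
# (where the chocolate is, where Maxi believes it is, where Maxi looks)
WORLDS = frozenset(product(LOCATIONS, repeat=3))


def model_of(theory):
    """The model a theory specifies: the set of worlds in which the theory is true."""
    return frozenset(world for world in WORLDS if theory(world))


def theory_a(world):
    _chocolate, believed, looks = world
    return looks == believed  # Maxi looks where he believes the chocolate is


def theory_b(world):
    _chocolate, believed, looks = world
    # Worded differently: for every location, Maxi looks there iff he believes it is there
    return all((looks == loc) == (believed == loc) for loc in LOCATIONS)


print(theory_a is theory_b)                      # False: two different theories
print(model_of(theory_a) == model_of(theory_b))  # True: one and the same model
print(len(WORLDS), len(model_of(theory_a)))      # 8 4
```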

conclusion

In conclusion, ...

challenge

Explain the emergence in development

of mindreading.

So let me conclude.

The challenge we have been addressing was to understand the emergence of

mindreading.

Initially this seemed straightforward: you learn this from social

interaction using language as a tool (compare Gopnik's theory theory).

However, the discovery that abilities to track beliefs exist in infants

from around 7 months or earlier initially suggested a different picture:

one on which mindreading was likely to involve core knowledge. But, as

always, things are not so straightforward.

A-tasks

Children fail

because they rely on a model of minds and actions that does not incorporate beliefs

Children fail A-tasks

because they rely on a model of minds and actions that does not incorporate beliefs.

non-A-tasks

Children pass

by relying on a model of minds and actions that does incorporate beliefs

Children pass non-A-tasks

by relying on a model of minds and actions that does incorporate beliefs.

the dogma of mindreading

The dogma of mindreading (momentary version): any individual has at

most one model of minds and actions

at any one point in time.

There is also a developmental version of the dogma: the developmental dogma is that

there is either just one model or else a family of models where

one of the models, the best and most sophisticated model,

contains all of the states that are contained in

any of the models.

Two questions:

\begin{enumerate}

\item How do observations about tracking support conclusions about representing?

\item Why are there dissociations in nonhuman apes’, human infants’ and human adults’ performance on belief-tracking tasks?

\end{enumerate}

Q1

How do observations about tracking support conclusions about representing models?

Q2

Why are there dissociations in nonhuman apes’, human infants’ and human adults’ performance on belief-tracking tasks?

way forward? models & processes