
The problems with core knowledge look like they might be

the sort of problem a philosopher might be able to help with.

Jerry Fodor wrote a book called 'The Modularity of Mind' about what he calls modules.

And modules look a bit like core systems, as I'll explain.

Further, Spelke herself has at one point made a connection.

So let's have a look at the notion of modularity and see if that will help us.

core system = module?

‘In Fodor’s (1983) terms, visual tracking and preferential looking each may depend on modular mechanisms.’

\citep[p.\ 137]{spelke:1995_spatiotemporal}

Spelke et al 1995, p. 137

So what is a modular mechanism?

\subsection{Modularity}

Fodor’s three claims about modules:

\begin{enumerate}

\item they are ‘the psychological systems whose operations present the world to thought’;

\item they ‘constitute a natural kind’; and

\item there is ‘a cluster of properties that they have in common’ \citep[p.\ 101]{Fodor:1983dg}.

\end{enumerate}

Modules

- they are ‘the psychological systems whose operations present the world to thought’;

- they ‘constitute a natural kind’; and

- there is ‘a cluster of properties that they have in common … [they are] domain-specific computational systems characterized by informational encapsulation, high-speed, restricted access, neural specificity, and the rest’ (Fodor 1983: 101)

Modules are widely held to play a central role in explaining mental development and in accounts of the mind generally.


What are these properties?

Properties of modules:

\begin{itemize}

\item domain specificity (modules deal with ‘eccentric’ bodies of knowledge)

\item limited accessibility (representations in modules are not usually inferentially integrated with knowledge)

\item information encapsulation (roughly, modules are unaffected by general knowledge or representations in other modules)

\item innateness (roughly, the information and operations of a module are not straightforwardly consequences of learning; but see \citet{Samuels:2004ho}).

\end{itemize}

For something to be informationally encapsulated is, roughly, for its operation to be unaffected by the mere existence of general knowledge or representations stored in other modules (Fodor 1998b: 127)

Domain specificity

Let me illustrate limited accessibility ...

Limited accessibility is a familiar feature of many cognitive systems. When you grasp an object with a precision grip, it turns out that there is a very reliable pattern. At a certain point in moving towards it your fingers will reach a maximum grip aperture, which is normally a certain amount wider than the object to be grasped, and then start to close. Now there's no physiological reason why grasping should work like this, rather than the hand closing only once you contact the object. Maximum grip aperture shows anticipation of the object: the mechanism responsible for guiding your action does so by representing various things including some features of the object. But we ordinarily have no idea about this. The discovery of how grasping is controlled depended on high-speed photography.

This is an illustration of limited accessibility. (This can also illustrate information encapsulation and domain specificity.)

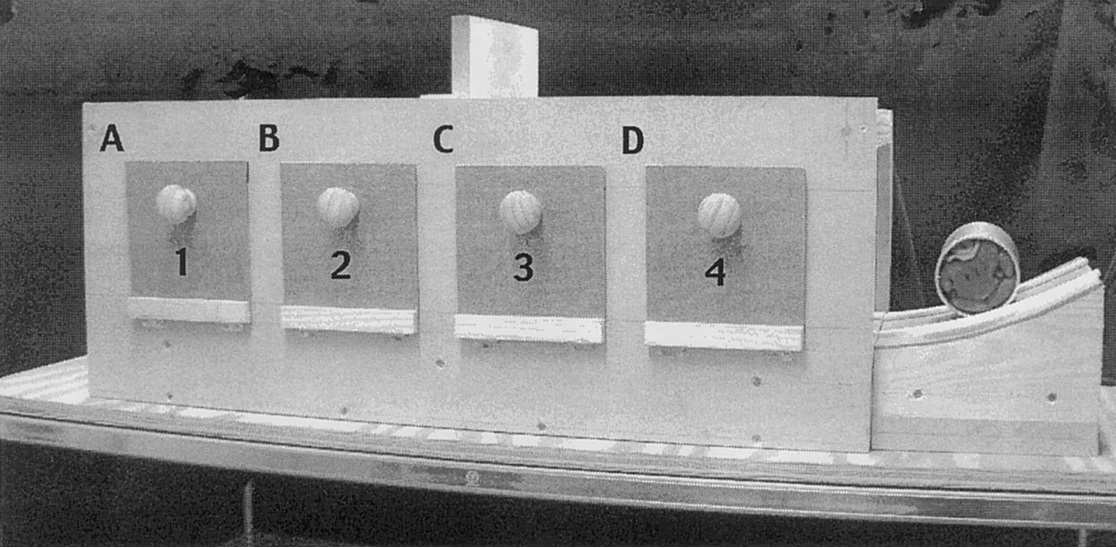

maximum grip aperture

(source: Jeannerod 2009, figure 10.1)

Glover (2002, figure 1a)

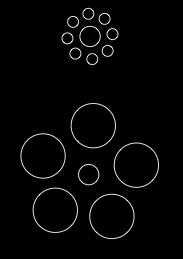

Illusion sometimes affects perceptual judgements but not actions:

information is in the system;

information is not available to knowledge \citep{glover:2002_visual}.

See further \citet{bruno:2009_when}: they argue that Glover \& Dixon's model \citep{glover:2002_dynamic} is incorrect, at least for grasping (pointing is a different story), because it predicts that the presence or absence of visual information during grasping shouldn't matter. But it does.

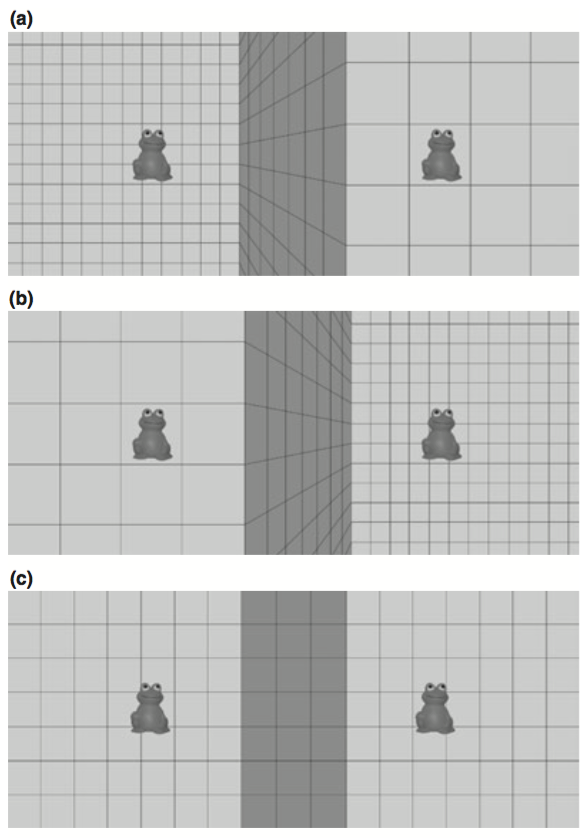

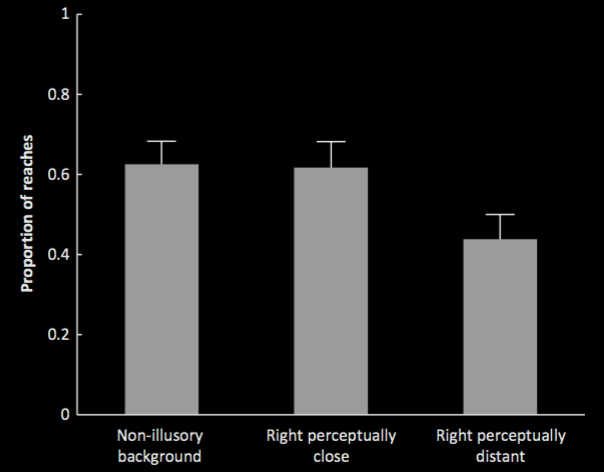

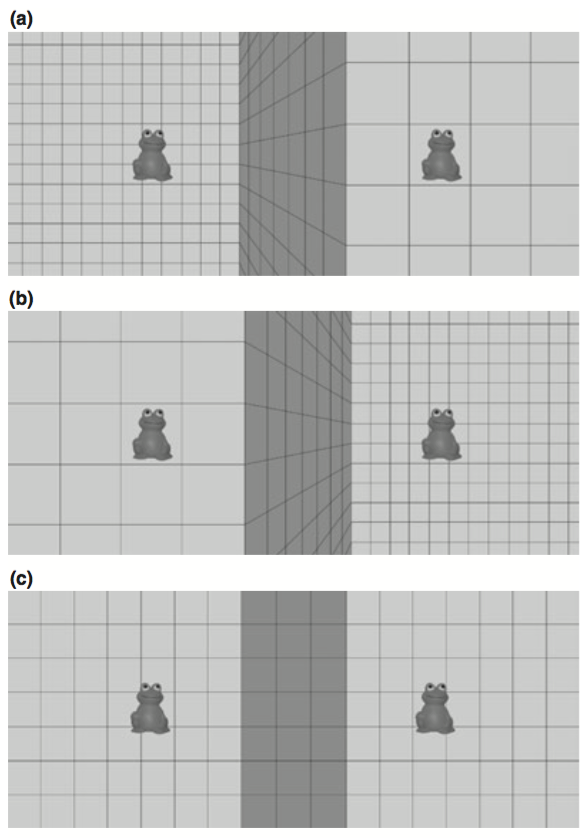

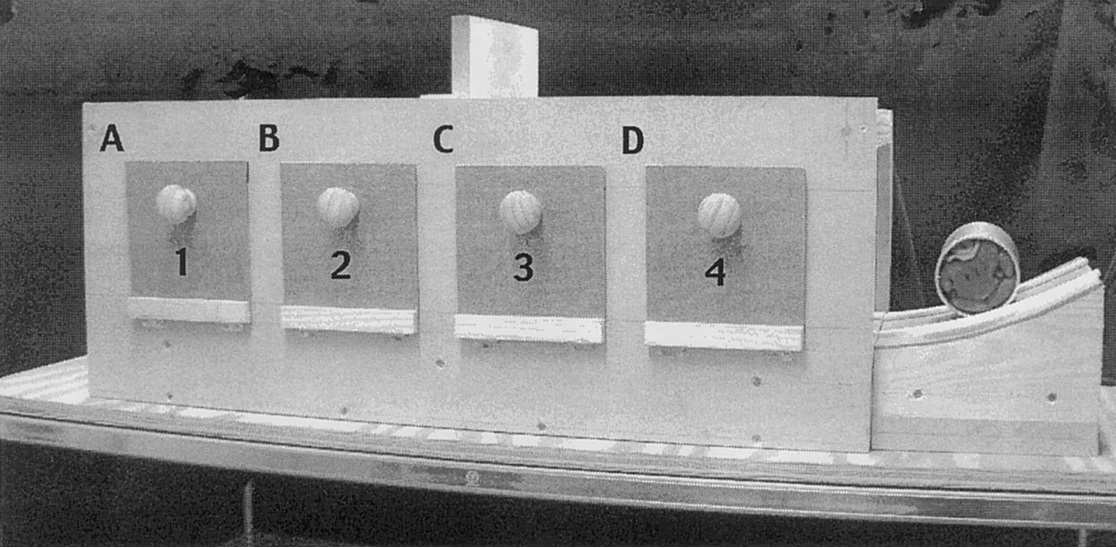

van Wermeskerken et al 2013, figure 1

You also get evidence for information encapsulation in four-month-olds.

To illustrate, consider \citet{vanwermeskerken:2013_getting} ...

A Ponzo-like background can make one frog appear further away than the other.

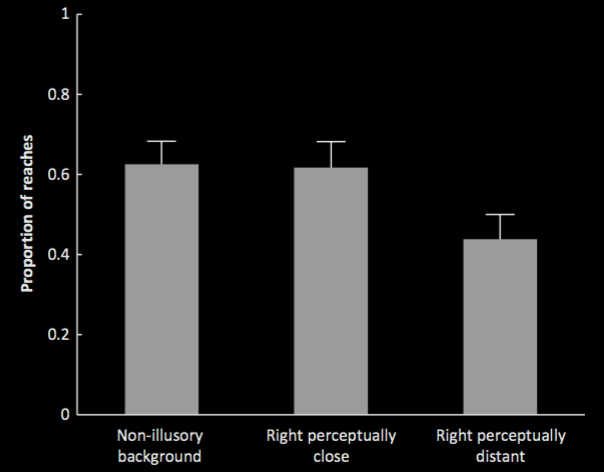

van Wermeskerken et al 2013, figure 2

This affects which object four-month-olds reach for, but does not affect the kinematics of their reaching actions.


Information encapsulation

So these are the key properties associated with modularity.

We've seen something like this list of properties before ...

Compare the notion of a core system with the notion of a module

The two definitions are different, but the differences are subtle enough that we don't want both.

My recommendation: if you want a better definition of core system, adopt

core system = module as a working assumption and then look to research on modularity

because there's more of it.

‘core systems are

- largely innate,

- encapsulated, and

- unchanging,

- arising from phylogenetically old systems

- built upon the output of innate perceptual analyzers’

(Carey and Spelke 1996: 520)

Modules are ‘the psychological systems whose operations present the world to thought’; they ‘constitute a natural kind’; and

there is ‘a cluster of properties that they have in common’

- innateness

- information encapsulation

- domain specificity

- limited accessibility

- ...

core system = module ?

I think it is reasonable to identify core systems with modules and to

largely ignore what different people say in introducing these ideas.

The theory is not strong enough to support lots of distinctions.

Will the notion of modularity help us in meeting the objections to the Core Knowledge View?

Recall that the challenges were these:

- multiple definitions

- justification for definition by list-of-features

- definition by list-of-features rules out explanation

- mismatch of definition to application

Let’s go back and see what Fodor says about modules again ...

Consider the first objection, that there are multiple definitions ...

Not all researchers agree about the properties of modules. That they are informationally encapsulated is denied by Dan Sperber and Deirdre Wilson (2002: 9), Simon Baron-Cohen (1995) and some evolutionary psychologists (Buller and Hardcastle 2000: 309), whereas Scholl and Leslie claim that information encapsulation is the essence of modularity and that any other properties modules have follow from this one (1999b: 133; this also seems to fit what David Marr had in mind, e.g. Marr 1982: 100-1). According to Max Coltheart, the key to modularity is not information encapsulation but domain specificity; he suggests Fodor should have defined a module simply as 'a cognitive system whose application is domain specific' (1999: 118). Peter Carruthers, on the other hand, denies that domain specificity is a feature of all modules (2006: 6). Fodor stipulated that modules are 'innately specified' (1983: 37, 119), and some theorists assume that modules, if they exist, must be innate in the sense of being implemented by neural regions whose structures are genetically specified (e.g. de Haan, Humphreys and Johnson 2002: 207; Tanaka and Gauthier 1997: 85); others hold that innateness is 'orthogonal' to modularity (Karmiloff-Smith 2006: 568). There is also debate over how to understand individual properties modules might have (e.g. Hirschfeld and Gelman 1994 on the meanings of domain specificity; Samuels 2004 on innateness).

In short, then, theorists invoke many different notions of modularity, each barely different from others. You might think this is just a terminological issue. I want to argue that there is a substantial problem: we currently lack any theoretically viable account of what modules are. The problem is not that 'module' is used to mean different things; after all, there might be different kinds of module. The problem is that none of its various meanings have been characterised rigorously enough. All of the theorists mentioned above except Fodor characterise notions of modularity by stipulating one or more properties their kind of module is supposed to have. This way of explicating notions of modularity fails to support principled ways of resolving controversy.

No key explanatory notion can be adequately characterised by listing properties, because the explanatory power of any notion depends in part on there being something which unifies its properties, and merely listing properties says nothing about why they cluster together.

So much the same objections which applied to the very notion of core knowledge appear to recur for modules. But note one interesting detail ...

Modules

- they are ‘the psychological systems whose operations present the world to thought’;

- they ‘constitute a natural kind’; and

- there is ‘a cluster of properties that they have in common … [they are] domain-specific computational systems characterized by informational encapsulation, high-speed, restricted access, neural specificity, and the rest’ (Fodor 1983: 101)

Will the notion of modularity help us in meeting the objections to the Core Knowledge View?

- multiple definitions

- justification for definition by list-of-features

- definition by list-of-features rules out explanation

- mismatch of definition to application

We’ve been considering the first objection, that there are multiple definitions ...

What about the objection that picking out a set of features is unjustified ...


Interestingly, Fodor doesn't define modules by specifying a cluster of properties (pace Sperber 2001: 51); he mentions the properties only as a way of gesturing towards the phenomenon (Fodor 1983: 37) and he also says that modules constitute a natural kind (see Fodor 1983: 101 quoted above).


The same point applies to the claim that defining modules by listing features is unexplanatory: if we are not listing features but identifying a natural kind, then the objection doesn’t quite get started.

As far as the ‘justification for definition by list-of-features’ and ‘definition by list-of-features rules out explanation’ problems go, everything rests on the idea that modules are a natural kind. I think this idea deserves careful scrutiny but as far as I know there's only one paper on this topic, which is by me.

I'm not going to talk about the paper here; let me leave it like this: if you want to invoke a notion of core knowledge or modularity, you have to reply to these problems. And one way to reply to them (the only way I know) is to develop the idea that modules are a natural kind. If you want to know more, ask me for my paper and I'll send it to you.

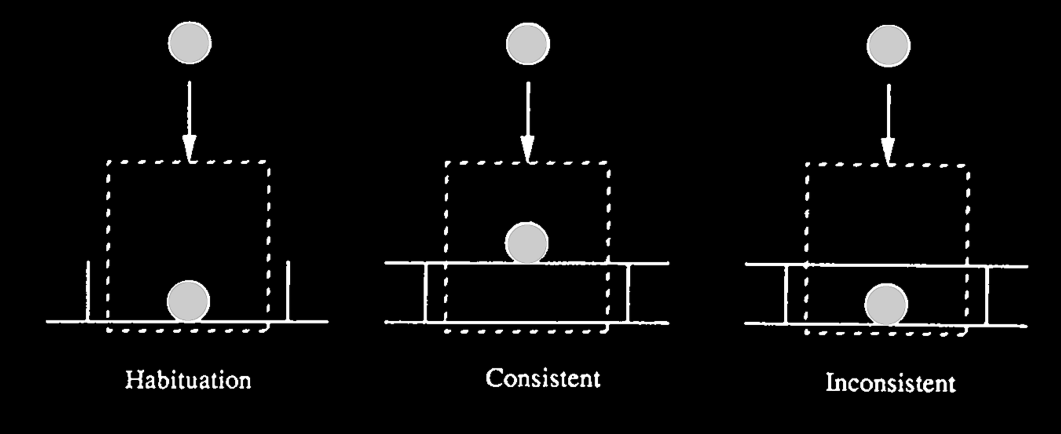

Recall the discrepancy in looking vs search measures.

What property of modules could help us to

explain it?

Spelke et al 1992, figure 2

Hood et al 2003, figure 1

| | occlusion | endarkening |
| --- | --- | --- |
| violation-of-expectations | ✔ | ✘ |
| manual search | ✘ | ✔ |

Charles & Rivera (2009)

- domain specificity

modules deal with ‘eccentric’ bodies of knowledge

- limited accessibility

representations in modules are not usually inferentially integrated with knowledge

- information encapsulation

roughly, modules are unaffected by general knowledge or representations in other modules

For something to be informationally encapsulated is, roughly, for its operation to be unaffected by the mere existence of general knowledge or representations stored in other modules (Fodor 1998b: 127)

- innateness

roughly, the information and operations of a module are not straightforwardly consequences of learning

We already considered innateness and information encapsulation.

To say that a system or module exhibits limited accessibility is to say that the representations in the system are not usually inferentially integrated with knowledge.

This is a key feature we need to assign to modular representations (=core knowledge) in order to explain the apparent discrepancies in the findings about when knowledge emerges in development.

Limited accessibility explains why the representations might drive some actions (e.g. certain looking behaviours) but not others (e.g. certain searching actions).

But the bare appeal to limited accessibility leaves open why it is the looking and not the searching that the representations drive (rather than the converse).

Further, we have to explain why the representations fail to drive searching in the occlusion case, whereas they fail to drive looking in the endarkening case.

Clearly we can’t explain this pattern just by invoking limited accessibility.

And, of course, to say that we can explain something by invoking limited accessibility is too much.

After all, limited accessibility is more or less what we're trying to explain.

But this is the first problem --- the problem with the standard way of

characterising modularity and core systems merely by listing features.

core system = module

Some, not all, objections to the Core Knowledge View overcome:

- multiple definitions

- justification for definition by list-of-features

- definition by list-of-features rules out explanation

- mismatch of definition to application

The Core Knowledge View generates no relevant predictions.

The main objection is unresolved